Based on user experiences shared on review platforms and on each tool's focus area, here is a list of the top 10 open-source log analysis tools to help you streamline log ingestion, visualize ad-hoc log data, and improve overall observability.

These open-source log trackers and analysis tools are compatible with a range of log sources and operating systems, and they are highly regarded for their usability and community support.

See the description of the categories at the end of this article.

Tool selection & sorting:

- GitHub stars: 50+

- GitHub contributors: 20+

- Sorting: Tools in the log management category are listed first, sorted by GitHub stars in descending order; tools in the other categories follow, also sorted by GitHub stars.


Disclaimer: The insights below come from user experiences shared on Reddit and G2.

Nagios

Nagios is a host, service, and network monitoring application written in C and licensed under the GNU General Public License (GPL).

Its log management product, Nagios Log Server, is designed to streamline data gathering and make information more accessible to system administrators. The Nagios Log Server engine collects data in real time and feeds it into a search tool.

The current version of Nagios is compatible with servers running Microsoft Windows, Linux, and Unix. A built-in setup wizard lets you integrate a new endpoint or application.

Key features:

- Monitoring features:

  - Monitor network services (e.g., SMTP, POP3, HTTP, PING).

  - Monitor host resources (processor load, disk utilization, etc.).

  - A plugin interface that allows for user-created service monitoring methods.

- Log management features:

  - Automated log file rotation and archiving.

  - Filtering of log data by geographic origin, which lets you use mapping tools to build dashboards that show how your traffic flows.

  - Optional web interface for viewing current network status, log files, and other information.
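The plugin interface follows a simple convention from the Nagios plugin guidelines: a plugin prints one line of status text (optionally followed by performance data after a "|") and reports the result through its exit code: 0 for OK, 1 for WARNING, 2 for CRITICAL, and 3 for UNKNOWN. The sketch below is a hypothetical disk-usage check written under that convention; the function names, thresholds, and checked path are illustrative assumptions, not part of Nagios itself.

```python
import shutil

# Nagios plugin exit codes: OK, WARNING, CRITICAL, UNKNOWN.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def check_disk(used_pct: float, warn: float = 80.0, crit: float = 90.0) -> tuple[int, str]:
    """Map a disk-usage percentage to a Nagios status code and one output line.

    Hypothetical helper: the thresholds are illustrative defaults.
    """
    # Performance data follows the "|" separator: label=value[UOM];warn;crit
    perfdata = f"used={used_pct:.1f}%;{warn};{crit}"
    if used_pct >= crit:
        state, code = "CRITICAL", CRITICAL
    elif used_pct >= warn:
        state, code = "WARNING", WARNING
    else:
        state, code = "OK", OK
    return code, f"DISK {state} - {used_pct:.1f}% used | {perfdata}"


def main(path: str = "/") -> int:
    """Check the filesystem at `path`, print the status line, return the exit code."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        print(f"DISK UNKNOWN - {exc}")
        return UNKNOWN
    code, message = check_disk(100.0 * usage.used / usage.total)
    print(message)
    return code
```

To run this as an actual plugin, the script would end with `sys.exit(main())` (after `import sys`) so that Nagios receives the exit code.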

Fluentd

Fluentd is an open-source data-gathering software project that can process logs by adding context, changing their structure, and sending them to log storage.

Fluentd receives events from several data sources and sends them to files, RDBMS, NoSQL, IaaS, SaaS, and Hadoop. Fluentd offers 500+ community-contributed plugins that connect several data sources and outputs (e.g. log management or big data management systems).
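Each Fluentd event consists of a tag, a timestamp, and a record (a set of key/value pairs); filter plugins such as record_transformer can enrich or reshape the record before an output plugin stores it. The Python sketch below only mimics that enrichment step outside of Fluentd to show what "adding context" and "changing structure" mean; the `enrich` function, field names, and sample tag are hypothetical.

```python
import json
import socket
from datetime import datetime, timezone


def enrich(tag: str, event_time: float, record: dict, hostname: str = "") -> dict:
    """Return a new record with added context and a nested structure.

    Hypothetical helper that mimics what a Fluentd filter step does.
    """
    return {
        # Context the original record did not carry.
        "hostname": hostname or socket.gethostname(),
        "tag": tag,
        "timestamp": datetime.fromtimestamp(event_time, tz=timezone.utc).isoformat(),
        # Structural change: keep the original fields under one key.
        "payload": record,
    }


event = enrich("app.access", 0.0, {"status": 200, "path": "/"}, hostname="web-1")
print(json.dumps(event))
```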

Data sources:

- Application logs: Java, Ruby, Ruby on Rails, Python, PHP, Perl, Node.js, Scala.

- Platforms and network protocols: Microsoft, .NET, UNIX®, TCP/IP, Syslog.

- IoT devices: Raspberry Pi

- Others: Docker, Kafka, PostgreSQL Slow Query Log, etc.

Fluentd itself is free and open source under the Apache License 2.0. The Fluent Commerce plan sometimes cited as its paid version belongs to an unrelated order management product: on the AWS Marketplace, its price varies according to order volume and inventory update velocity, and a 36-month contract could cost $300,000.

Graylog

Graylog is a log management platform with a free, open edition, and it also offers security information and event management (SIEM) capabilities. It is built to collect data from a variety of sources, allowing you to centralize, protect, and monitor your log data, and it can support a variety of cybersecurity tasks.

Enterprise edition: Graylog can be deployed across large clusters that ingest millions of log messages per second, which makes it suitable for enterprise use. The Enterprise edition includes additional features, such as:

- User authentication

- Teams management

- Configurable reporting

Key considerations:

Feature-rich for log analysis:

- Handles syslog from devices like Cisco and pfSense effectively.

- Offers features like alerting, dashboard creation, and long-term log retention for compliance purposes.

- Works with tools like Filebeat to handle detailed log outputs, such as those from Suricata or HAProxy.

- Support for custom logs.

Ease of use:

- Can process and extract specific log fields using extractors and pipelines.

- Intuitive dashboard creation makes it suitable for operational monitoring.

- Offers integrations such as Active Directory (AD) for user management, though some features, such as role-based LDAP access, are limited to the Enterprise edition.
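Extractors and pipeline rules pull structured fields out of raw message text, often with regular expressions. The Python sketch below shows the same idea on a firewall-style syslog line; the sample message, the pattern, and the field names are hypothetical, and Graylog itself configures extraction through its web interface or pipeline rule language rather than Python.

```python
import re

# Hypothetical pattern: capture every key=value pair in the message.
FIELD_RE = re.compile(r"(\w+)=(\S+)")


def extract_fields(message: str) -> dict[str, str]:
    """Return each key=value pair found in the message as a named field."""
    return dict(FIELD_RE.findall(message))


fields = extract_fields("filterlog: action=block src=10.0.0.5 dst=192.0.2.10 dport=443")
print(fields)
```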

Syslog-ng

Syslog-ng is an open-source log management program that provides a versatile solution for collecting, analyzing, and storing logs.

It enables you to collect data from several sources, then parse, classify, rewrite, and correlate the logs into a single format before storing or transferring them to other systems such as Apache Kafka or Elasticsearch.

With syslog-ng, logs can be:

- Classified and structured using built-in parsers (e.g., csv-parser).

- Stored in files, message queues (e.g. AMQP), or databases (e.g. PostgreSQL, MongoDB).

- Forwarded to big data technologies (such as Elasticsearch, Apache Kafka, or Apache Hadoop).

Key features:

- Plugins written in C, Python, Java, Lua, or Perl.

- Support for several message formats, including RFC3164, RFC5424, JSON.

- Linux, Solaris, and BSD operating system support.

- UDP, TCP, TLS, and RELP log transport protocols support.

- TLS encryption of log transport.

- Automated log archiving for 500,000+ log messages.
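To make the RFC 5424 support concrete, the sketch below assembles a message in that format: a priority value computed as facility × 8 + severity, the version number 1, a timestamp, hostname, app name, process ID, message ID, structured data (here the nil value "-"), and the free-form message. The helper name and sample values are hypothetical; syslog-ng parses and emits this format natively.

```python
from datetime import datetime, timezone


def rfc5424_message(facility: int, severity: int, hostname: str, app_name: str,
                    procid: str, msgid: str, text: str, ts: datetime) -> str:
    """Build an RFC 5424 syslog line with no structured data ("-")."""
    pri = facility * 8 + severity  # PRI = facility * 8 + severity
    timestamp = ts.isoformat(timespec="milliseconds").replace("+00:00", "Z")
    return f"<{pri}>1 {timestamp} {hostname} {app_name} {procid} {msgid} - {text}"


line = rfc5424_message(
    facility=1,  # user-level messages
    severity=3,  # error
    hostname="web-1",
    app_name="myapp",
    procid="1234",
    msgid="ID47",
    text="payment failed",
    ts=datetime(2024, 1, 2, 3, 4, 5, tzinfo=timezone.utc),
)
print(line)
```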

Elastic Stack (ELK Stack) – Logstash

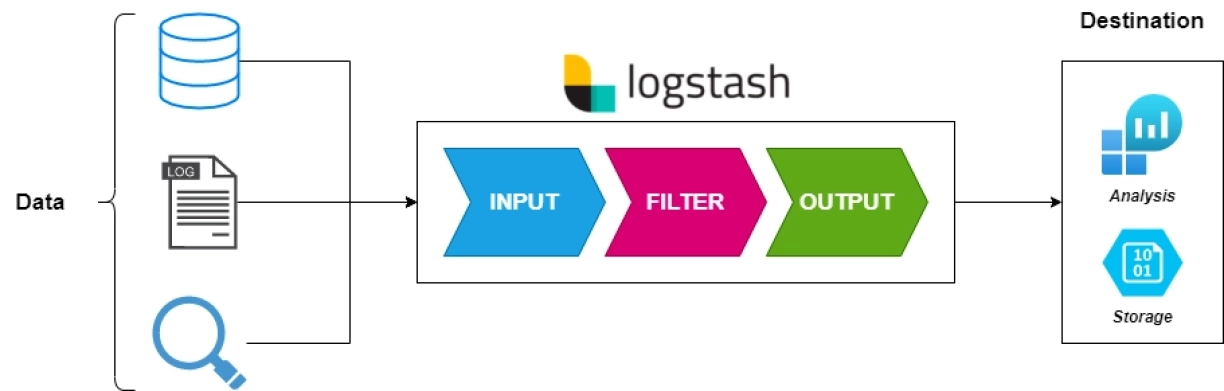

The Elastic Stack is a group of open-source products; its main components are Elasticsearch, Kibana, and Logstash.

Logstash is a server-side data processing pipeline that commonly feeds the Elasticsearch database. It can collect and process logs from several sources and send them to several destinations, including the Elasticsearch engine or files.
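A Logstash pipeline is organized into three stages: inputs that collect events, filters that process them, and outputs that send them on. The Python generator chain below is only an analogy for that input, filter, output flow, not Logstash configuration; the stage functions and sample lines are hypothetical.

```python
import json
from typing import Iterable, Iterator


def read_input(lines: Iterable[str]) -> Iterator[dict]:
    """Input stage: wrap each raw line in an event."""
    for line in lines:
        yield {"message": line.rstrip("\n")}


def apply_filter(events: Iterable[dict]) -> Iterator[dict]:
    """Filter stage: parse 'LEVEL text' lines and drop debug noise."""
    for event in events:
        level, _, text = event["message"].partition(" ")
        if level == "DEBUG":
            continue  # discard the event, similar in spirit to a drop filter
        yield {"level": level, "text": text}


def write_output(events: Iterable[dict]) -> list[str]:
    """Output stage: serialize each event as a JSON line."""
    return [json.dumps(event) for event in events]


raw = ["INFO service started\n", "DEBUG cache miss\n", "ERROR disk full\n"]
output = write_output(apply_filter(read_input(raw)))
print("\n".join(output))
```

Chaining generators keeps each stage independent and processes events one at a time, which loosely mirrors how pipeline stages are configured separately.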

Logstash does not include a built-in dashboard for viewing logs. However, it can be paired with other tools, such as Kibana or SigNoz, to generate and share log data visualizations and dashboards.

Distinct feature: The ELK Stack can monitor applications built on open-source WordPress installations. Unlike most out-of-the-box security audit log products, which track only admin and PHP logs, the ELK Stack can also search web server and database logs.

Prometheus

Prometheus is a metrics monitoring system that runs on cloud-native environments like Kubernetes. It operates as a service that scrapes endpoints and records the metrics in a time-series database.

These endpoints are expected to expose metrics in the Prometheus text-based exposition format, which has been standardized as OpenMetrics. You can also generate metrics from logs (for example, by counting specific categories of log lines, or by extracting numbers from lines and aggregating them mathematically).
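As an illustration of deriving metrics from logs, the sketch below counts log lines per level and renders the counts in the Prometheus text exposition format, which an endpoint could then serve for Prometheus to scrape. The metric name `log_lines_total` and the sample lines are hypothetical; in practice, a dedicated log-to-metrics exporter would usually do this job.

```python
from collections import Counter


def count_levels(lines: list[str]) -> Counter:
    """Count log lines by their level=... field (logfmt-style lines)."""
    counts: Counter = Counter()
    for line in lines:
        for token in line.split():
            if token.startswith("level="):
                counts[token.removeprefix("level=")] += 1
                break
    return counts


def to_exposition(counts: Counter) -> str:
    """Render the counts as one counter metric with a 'level' label."""
    out = [
        "# HELP log_lines_total Log lines seen, by level.",
        "# TYPE log_lines_total counter",
    ]
    for level in sorted(counts):
        out.append(f'log_lines_total{{level="{level}"}} {counts[level]}')
    return "\n".join(out) + "\n"


sample = [
    "ts=2024-01-01T00:00:00Z level=info msg=started",
    "ts=2024-01-01T00:00:01Z level=error msg=failed",
    "ts=2024-01-01T00:00:02Z level=info msg=ready",
]
text = to_exposition(count_levels(sample))
print(text, end="")
```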

Prometheus writes its own log lines in a structured key/value format (logfmt).

Some keys are present in every log line:

- ts: The timestamp at which the line was recorded.

- caller: The source code file containing the logging statement.

- level: The severity of the log message (debug, info, warn, or error).
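Because every line carries the same keys, the output is easy to process mechanically. The sketch below parses a key/value line, including quoted values that contain spaces, using Python's `shlex` module. The sample line is made up for illustration and is not verbatim Prometheus output, and `shlex` only approximates logfmt quoting rules.

```python
import shlex


def parse_logfmt(line: str) -> dict[str, str]:
    """Split a key=value line into a dict; quoted values may contain spaces.

    shlex approximates logfmt quoting, which is enough for simple lines.
    """
    fields: dict[str, str] = {}
    for token in shlex.split(line):
        key, sep, value = token.partition("=")
        fields[key] = value if sep else ""
    return fields


# Hypothetical line in the ts/caller/level/msg layout described above.
entry = parse_logfmt(
    'ts=2024-01-01T00:00:00.000Z caller=main.go:100 level=info msg="Server is ready"'
)
print(entry["level"], entry["msg"])
```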

Example (standard error logs): When you run Prometheus in a terminal, it writes log lines in this key/value format to standard error.

Configuring logging: By default, Prometheus only produces log lines with a severity level of info or above, so debug-level log statements are not visible. To change this behavior, use the --log.level flag to set the minimum visible log level.

For example, to see debug log lines, launch Prometheus with --log.level=debug.

This produces very verbose output, so avoid running Prometheus at the debug log level in normal operation. However, temporarily enabling it can help pinpoint specific problems.

Grafana – Loki

Grafana Loki is a multi-tenant log aggregation system created by Grafana Labs. It is a strong choice for organizations looking to unify logs, metrics, and traces using tools like Grafana. Loki differs from Prometheus by focusing on logs instead of metrics.

Loki indexes metadata (labels) rather than entire log contents. Its label-based indexing organizes logs by their associated key-value pairs (labels). This strategy considerably reduces storage requirements and speeds up the ingestion of massive log volumes.

Log searches: Retrieval of log data is fast because queries first narrow the data down by labels instead of searching full text. However, this design limits full-text search: Loki keeps no index of log content, so text filters (for example, the LogQL line filter |= "error") must scan every line in the streams selected by the label query, which is slower for broad searches than an indexed full-text search.
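The trade-off can be illustrated with a toy in-memory store: streams are keyed only by their label set, a query first selects streams by label, and any text filter then scans the selected lines one by one. This is a hypothetical Python sketch of the idea, not Loki's storage engine; the class name, labels, and lines are made up.

```python
from collections import defaultdict


class LabelIndexedStore:
    """Toy log store that keys streams by labels only (no full-text index)."""

    def __init__(self) -> None:
        # Key: frozenset of (label, value) pairs; value: list of raw lines.
        self.streams: dict[frozenset, list[str]] = defaultdict(list)

    def push(self, labels: dict[str, str], line: str) -> None:
        self.streams[frozenset(labels.items())].append(line)

    def query(self, selector: dict[str, str], contains: str = "") -> list[str]:
        """Select streams whose labels match, then scan their lines for text."""
        wanted = set(selector.items())
        results = []
        for labels, lines in self.streams.items():
            if wanted <= labels:  # label matching never looks at line content
                # Brute-force scan: the part that has no full-text index.
                results.extend(line for line in lines if contains in line)
        return results


store = LabelIndexedStore()
store.push({"app": "api", "env": "prod"}, "GET /health 200")
store.push({"app": "api", "env": "prod"}, "POST /pay 500 timeout")
store.push({"app": "web", "env": "prod"}, "GET / 500 upstream error")
print(store.query({"app": "api"}, contains="500"))
```

A narrow label selector keeps the scan small; a broad one (such as only env="prod") forces the filter to read every matching stream.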

SigNoz

SigNoz is an open-source observability and application performance monitoring (APM) platform built on OpenTelemetry that brings logs, traces, and metrics together in a single application. It is an open-source alternative to Datadog and New Relic.

Key information regarding SigNoz:

- Unified observability: Logs, metrics, and traces from your applications are collected in one location, allowing for faster data correlation and analysis to identify problems.

- OpenTelemetry-based: Uses the OpenTelemetry standard for instrumentation, allowing you to integrate with several languages and frameworks while avoiding vendor lock-in.

- Application performance management (APM): Can be used to track the performance of your applications, including bottlenecks and errors.
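Correlation across signals typically relies on shared identifiers: OpenTelemetry attaches a trace ID to spans and, when logging is instrumented, to log records as well, so logs can be grouped with the trace they belong to. The Python sketch below shows that grouping on plain dictionaries; the record layout and IDs are hypothetical and do not reflect SigNoz's storage schema.

```python
# Hypothetical records: one root span per trace, plus log records
# that carry the same trace_id.
spans = [
    {"trace_id": "a1", "name": "GET /checkout", "duration_ms": 950},
    {"trace_id": "b2", "name": "GET /health", "duration_ms": 3},
]
logs = [
    {"trace_id": "a1", "severity": "ERROR", "body": "payment gateway timeout"},
    {"trace_id": "b2", "severity": "INFO", "body": "ok"},
    {"trace_id": "a1", "severity": "INFO", "body": "retrying payment"},
]


def correlate(spans: list[dict], logs: list[dict]) -> dict[str, dict]:
    """Group log bodies under the span that shares their trace ID."""
    by_trace: dict[str, dict] = {s["trace_id"]: {"span": s, "logs": []} for s in spans}
    for record in logs:
        if record["trace_id"] in by_trace:
            by_trace[record["trace_id"]]["logs"].append(record["body"])
    return by_trace


# Find slow requests and show the logs recorded under the same trace.
for trace_id, item in correlate(spans, logs).items():
    if item["span"]["duration_ms"] > 500:
        print(item["span"]["name"], item["logs"])
```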

Lnav

The Logfile Navigator (lnav) is a log file viewer for the terminal. The figure below shows a combination of syslog and web access log files, with failed queries displayed in red.

Given a set of log files/directories, Lnav can:

- detect their format

- merge the files by time into a single view

- tail the files, follow renames, and find new files in directories

- build an index of errors

- encode logs as JSON lines
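Merging by time works because each file is already in chronological order, so the files can be interleaved without re-sorting everything. The Python sketch below does this with `heapq.merge`, assuming every line starts with an ISO 8601 timestamp in the same shape (which sorts correctly as text); lnav itself detects many timestamp formats, and the sample lines are hypothetical.

```python
import heapq


def merge_by_time(*files: list[str]) -> list[str]:
    """Interleave already time-ordered log files into one chronological view.

    Assumes each line begins with an ISO 8601 timestamp of the same shape,
    so comparing the first token as a string orders lines by time.
    """
    return list(heapq.merge(*files, key=lambda line: line.split(" ", 1)[0]))


syslog = [
    "2024-01-01T10:00:00Z sshd: accepted login",
    "2024-01-01T10:00:05Z cron: job started",
]
access = [
    "2024-01-01T10:00:02Z GET /index.html 200",
    "2024-01-01T10:00:07Z GET /missing 404",
]
for line in merge_by_time(syslog, access):
    print(line)
```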

Angle-grinder

Angle-grinder lets you parse, aggregate, sum, average, and sort your data. It is designed for situations in which your data is not in Graphite, Kibana, Sumo Logic, Splunk, etc., but you still need to perform complex analytics.

Angle-grinder can process more than 1 million rows per second (up to 5 million with simple pipelines), making it suitable for log aggregation at scale. As data is processed, your terminal displays a live update of the results.
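For comparison, the kind of query angle-grinder runs (parse JSON lines, group by a field, then count and average) looks like this in plain Python. This is a hypothetical sketch with made-up field names, not angle-grinder's implementation or query syntax, and it makes no attempt to match its throughput.

```python
import json
from collections import defaultdict


def summarize(lines: list[str], group_by: str, value: str) -> list[tuple[str, int, float]]:
    """Count and average `value` per `group_by` key, largest groups first."""
    groups: dict[str, list[float]] = defaultdict(list)
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip lines that are not JSON
        if not isinstance(record, dict) or group_by not in record or value not in record:
            continue  # skip records without the fields we need
        groups[str(record[group_by])].append(float(record[value]))
    rows = [(key, len(vals), sum(vals) / len(vals)) for key, vals in groups.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


sample = [
    '{"status": 200, "latency_ms": 12}',
    '{"status": 500, "latency_ms": 340}',
    '{"status": 200, "latency_ms": 18}',
    "not json",
]
for status, count, avg in summarize(sample, "status", "latency_ms"):
    print(f"{status}\t{count}\t{avg:.1f}")
```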

Open-source log analysis tools enable users to collect, process, store, search, and analyze log data from various sources, such as servers, applications, and network devices. These tools help SecOps, ITOps, and DevOps teams make use of that log data.

Description of the categories

Log management

This category systematically handles log data, including ingestion, storage, parsing, and querying. Log management tools are used for centralizing logs from multiple sources, making it easier to search and analyze system and application logs for debugging, troubleshooting, and compliance.

Enterprise monitoring

Enterprise monitoring tools go beyond traditional log management by providing comprehensive insights into the performance and health of large-scale IT environments. These tools integrate logs with performance metrics, application traces, and infrastructure data to give a unified view of enterprise operations.

Enterprise monitoring for logs

A specialized focus under enterprise monitoring, these tools emphasize log collection and analysis within enterprise environments. They are optimized for scalability and cater to organizations needing detailed log analytics to handle massive amounts of data across distributed systems.

Observability tools

Observability tools combine metrics, logs, and traces to offer holistic insights into system behavior. These tools provide deep visibility into application performance and dependencies, enabling developers and operators to diagnose complex issues efficiently.

Log file navigator

These tools provide interfaces or command-line utilities to navigate through log data quickly. They are valuable for identifying patterns, filtering specific entries, and troubleshooting localized issues.

Command line log analysis

This category refers to tools or utilities designed for log analysis directly from the command line. They are lightweight and efficient, and developers and sysadmins prefer them for quick analysis tasks that need command-line log filtering and search.

For guidance on choosing the right tool or service, check out our data-driven list of log analysis software.