As AI advances, the research community's access to generative AI tools such as language models is important for innovation. However, today's AI models often sit behind proprietary walls, which hinders that innovation. Meta's release of LLaMA 2 aims to democratize this space, allowing researchers and commercial users worldwide to explore and push the boundaries of what AI can achieve.

In this article, we explain Meta's LLaMA model and its latest version, LLaMA 2.

What is LLaMA?

In February 2023, Meta announced LLaMA, which stands for Large Language Model Meta Artificial Intelligence. This large language model (LLM) was released in several sizes, ranging from 7 billion to 65 billion parameters. The LLaMA models differ by parameter count [1]:

- 7B parameters (trained on 1 trillion tokens)

- 13B parameters (trained on 1 trillion tokens)

- 33B parameters (trained on 1.4 trillion tokens)

- 65B parameters (trained on 1.4 trillion tokens)

Meta AI describes LLaMA as a smaller language model that is better suited to retraining and fine-tuning. This is a benefit because fine-tuned models are more suitable for commercial organizations and specific use cases.
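To make the size argument concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy at each nominal size. It assumes 16-bit (2-byte) weights, which is our assumption for illustration and not a figure from Meta. Fine-tuning needs additional memory for gradients and optimizer state, so treat these numbers as lower bounds.

```python
# Assumption for illustration: each weight is stored as a 16-bit float (2 bytes).
BYTES_PER_PARAM_FP16 = 2

# Nominal parameter counts taken from the list of LLaMA sizes above.
MODEL_SIZES = {"7B": 7e9, "13B": 13e9, "33B": 33e9, "65B": 65e9}


def weight_memory_gb(num_params, bytes_per_param=BYTES_PER_PARAM_FP16):
    """Estimate the memory needed to hold the weights only, in gigabytes."""
    return num_params * bytes_per_param / 1e9


for name, params in MODEL_SIZES.items():
    print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights")
```

Under these assumptions the smallest model needs roughly a tenth of the weight memory of the largest, which is one reason smaller models are easier to retrain and fine-tune.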

For more on fine-tuning LLMs for enterprise purposes, take a look at our guide.

Unlike many powerful large language models, which are typically available only through restricted APIs, LLaMA's model weights were made accessible to the AI research community by Meta AI under a noncommercial license. Access was initially granted selectively to academic researchers, people affiliated with government and civil society organizations, and academic institutions worldwide.

How was LLaMA trained?

Like other large language models, LLaMA takes a sequence of words as input and predicts the next word, repeating this step to generate text.
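The predict-and-append loop described above can be sketched with a toy model. The example below is not LLaMA's architecture: it swaps in a simple bigram counter (a table of which word most often follows each word) purely to show the iterative generation loop. The function names and the tiny corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def generate(following, prompt, max_new_words=5):
    """Repeatedly predict the most frequent next word and append it."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = following.get(words[-1])
        if not candidates:
            break  # the model has never seen a continuation for this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Toy corpus, hypothetical and far too small to be useful in practice.
corpus = "the cat sat on the mat and the cat sat down"
model = train_bigram(corpus)
print(generate(model, "the", max_new_words=2))  # prints "the cat sat"
```

A real LLM replaces the lookup table with a neural network that scores every possible next token given the whole preceding context, but the outer loop (predict, append, repeat) has the same shape.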

The training of this language model prioritized text from the top 20 languages with the highest number of speakers, particularly those using the Latin and Cyrillic scripts.

The training data of LLaMA comes mostly from large public websites and forums, such as [2]:

- Webpages scraped by CommonCrawl

- Open source repositories of source code from GitHub

- Wikipedia in 20 different languages

- Public domain books from Project Gutenberg

- The LaTeX source code for scientific papers uploaded to ArXiv

- Questions and answers from Stack Exchange websites

How does LLaMA perform compared to other large language models?

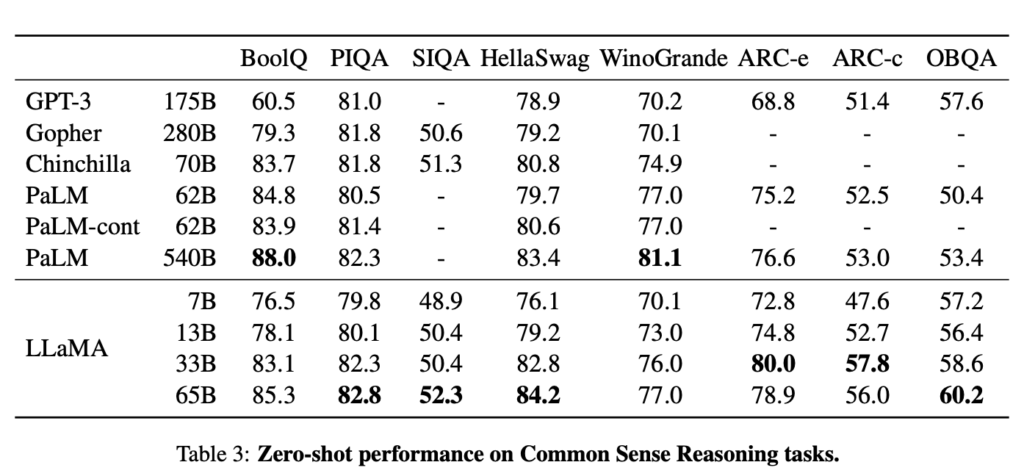

According to the creators of LLaMA, the model with 13 billion parameters outperforms GPT-3 (which has 175 billion parameters) on most Natural Language Processing (NLP) benchmarks [3]. Furthermore, their largest model is competitive with top-tier models such as PaLM and Chinchilla.

Truthfulness & bias

- LLaMA performs better than GPT-3 on the truthfulness test used to measure both models' performance. However, as the results show, LLMs still need improvement in terms of truthfulness.

- LLaMA with 65B parameters produces less biased outputs than other large LLMs such as GPT-3.

What is LLaMA 2?

On July 18, 2023, Meta and Microsoft jointly announced their support for the LLaMA 2 family of large language models on the Azure and Windows platforms [4]. Both companies state a shared commitment to democratizing AI and making AI models widely accessible, and Meta is taking an open approach with LLaMA 2. For the first time, the model is available for both research and commercial use.

LLaMA 2 is designed to help developers and organizations build generative AI tools and experiences. Meta and Microsoft give developers the freedom to choose the kinds of models they build on, supporting both open and frontier models.

Who can use LLaMA 2?

- Customers of Microsoft’s Azure platform can fine-tune and use the 7B, 13B, and 70B-parameter LLaMA 2 models.

- It is also accessible through Amazon Web Services, Hugging Face, and other providers [5].

- LLaMA 2 is being optimized to run locally on Windows. Developers working with Windows can use LLaMA 2 by targeting the DirectML execution provider through the ONNX Runtime.
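As a rough illustration of the last point, the sketch below shows how a Python program might ask ONNX Runtime to prefer DirectML and fall back to the CPU. The provider names `DmlExecutionProvider` and `CPUExecutionProvider` are ONNX Runtime's own identifiers; the helper functions and the model path are hypothetical, and the sketch assumes you already have a model exported to ONNX format and an ONNX Runtime build with DirectML support installed (for example, the `onnxruntime-directml` package).

```python
# Priority order: DirectML (GPU on Windows) first, CPU as the fallback.
PREFERRED_PROVIDERS = ["DmlExecutionProvider", "CPUExecutionProvider"]


def pick_providers(available):
    """Return the preferred providers that this ONNX Runtime build offers."""
    chosen = [p for p in PREFERRED_PROVIDERS if p in available]
    if not chosen:
        raise RuntimeError("no supported execution provider is available")
    return chosen


def create_session(model_path):
    """Open an ONNX model, using DirectML when the installed build supports it."""
    # Imported lazily so pick_providers can be used without onnxruntime installed.
    import onnxruntime as ort

    providers = pick_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)
```

A call such as `create_session("llama-2-7b.onnx")` (a hypothetical file name) would then run on DirectML where available. Exporting LLaMA 2 to ONNX and feeding it tokenized input are separate steps not covered here.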

References

1. “Introducing LLaMA: A foundational, 65-billion-parameter language model.” Meta AI, 24 February 2023, https://ai.facebook.com/blog/large-language-model-llama-meta-ai/. Accessed 24 July 2023.

2. “LLaMA.” Wikipedia, https://en.wikipedia.org/wiki/LLaMA. Accessed 24 July 2023.

3. “LLaMA: Open and Efficient Foundation Language Models.” arXiv, 13 June 2023, https://arxiv.org/pdf/2302.13971.pdf. Accessed 24 July 2023.

4. “Microsoft and Meta expand their AI partnership with LLama 2 on Azure and Windows.” The Official Microsoft Blog, 18 July 2023, https://blogs.microsoft.com/blog/2023/07/18/microsoft-and-meta-expand-their-ai-partnership-with-llama-2-on-azure-and-windows/. Accessed 24 July 2023.

5. “Meta and Microsoft Introduce the Next Generation of Llama.” Meta AI, 18 July 2023, https://ai.meta.com/blog/llama-2/. Accessed 24 July 2023.
