Diffusion models have evolved rapidly since their inception, becoming a dominant approach for generative media and achieving state-of-the-art performance across domains, with particularly notable breakthroughs in image synthesis, audio generation, and video generation. Masked diffusion has emerged as a promising alternative to autoregressive models for generative modeling of discrete data. Despite this potential, existing work has been held back by overly complex model formulations and unclear relationships between different theoretical perspectives. These limitations have led to suboptimal parameterizations and training objectives, which often required ad hoc adjustments to work around.

Researchers from Google DeepMind focus on masked (or absorbing) diffusion, a discrete diffusion framework introduced in "Structured Denoising Diffusion Models in Discrete State-Spaces" and since explored from multiple perspectives. By adopting the continuous-time approach that has been instrumental in advancing continuous state-space diffusion, the study aims to improve both the understanding and the performance of discrete generative models. The work makes several technical contributions aimed at simplifying training and improving performance: establishing key properties of the forward process, deriving a simplified expression for the Evidence Lower Bound (ELBO), and providing a unified theoretical framework that critically examines existing continuous-time discrete diffusion models.

The researchers formulate masked diffusion on a finite discrete state space. They augment the original state space with an additional mask state and define a forward "masking" process that turns each data point into the mask state at a random time; once a token is masked, it stays masked. In discrete time, the interval [0, 1] is divided into T segments, and a transition matrix governs each step: a token either remains unchanged or jumps to the mask state. Taking the limit T → ∞ yields a continuous-time forward process with a simple marginal: at time t, each token is still intact with probability α_t and masked with probability 1 - α_t, where the masking schedule α_t decreases from approximately 1 at t = 0 to approximately 0 at t = 1. This gives a flexible and mathematically rigorous foundation for generative modeling of discrete data.
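A minimal sketch of the forward masking marginal described above, in plain Python. The integer sentinel `MASK`, the function names, and the exact schedule formulas are illustrative assumptions: the linear schedule α_t = 1 - t and a cosine schedule α_t = 1 - cos(π/2 · (1 - t)) are common choices, not necessarily identical to those in the paper's code.

```python
import math
import random

MASK = -1  # hypothetical integer id for the extra mask state


def alpha_linear(t: float) -> float:
    """Linear schedule: probability that a token is still unmasked at time t."""
    return 1.0 - t


def alpha_cosine(t: float) -> float:
    """Cosine schedule (assumed form): alpha_t = 1 - cos(pi/2 * (1 - t))."""
    return 1.0 - math.cos(math.pi / 2 * (1.0 - t))


def forward_mask(tokens, t, alpha=alpha_linear, rng=random):
    """Sample x_t ~ q(x_t | x_0) in one shot.

    Each token is kept independently with probability alpha(t) and replaced
    by MASK otherwise. This is the continuous-time marginal, so no step-by-step
    simulation of the transition matrices is needed.
    """
    keep = alpha(t)
    return [tok if rng.random() < keep else MASK for tok in tokens]
```

For example, `forward_mask(list(range(10)), 0.5, rng=random.Random(0))` masks about half of the ten tokens. Sampling the marginal directly, rather than chaining T transitions, means a training example needs only one draw of t and one masking pass.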

The researchers build the generative model by defining a reverse process that approximately inverts the forward transitions. They adopt a mean parameterization, in which a neural network predicts the distribution of the original (clean) data point given the partially masked input. The network's outputs pass through a softmax to produce a probability vector over the clean tokens, with the constraint that the mask state receives zero probability, since clean data never contains the mask. The training objective is derived from an ELBO, a lower bound on the log marginal likelihood. In the continuous-time limit, the researchers show that this objective reduces to a weighted integral over time of cross-entropy losses, evaluated only at the masked positions. They also show that it has invariance properties analogous to those of continuous state-space diffusion models, where the signal-to-noise ratio plays the central role: the objective is invariant to the choice of masking schedule apart from its endpoints.
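The objective can be sketched as a one-sample Monte Carlo estimate. As we read the formulation, the continuous-time loss integrates, over t in (0, 1], the weight α'_t / (1 - α_t) times the expected sum over masked positions n of log μ_θ(x_t, t)[x_0^(n)]. Since α'_t < 0 and log-probabilities are non-positive, the result is a non-negative weighted cross-entropy. The `model` callable, which returns one row of K logits per position, is a hypothetical stand-in for the neural network, and the linear schedule is an assumption.

```python
import math
import random

MASK = -1  # hypothetical integer id for the mask state


def alpha_linear(t):
    return 1.0 - t


def dalpha_linear(t):
    return -1.0  # derivative of 1 - t


def mean_param(logits):
    """Softmax over the K clean-token logits only.

    The mask state has no logit, so it receives zero probability by construction.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def loss_estimate(x0, model, t, rng, alpha=alpha_linear, dalpha=dalpha_linear):
    """One-sample estimate of the weighted cross-entropy loss at time t in (0, 1]."""
    keep = alpha(t)
    xt = [tok if rng.random() < keep else MASK for tok in x0]
    probs = [mean_param(row) for row in model(xt, t)]
    # Only masked positions contribute; unmasked tokens are known exactly and add no loss.
    log_lik = sum(math.log(probs[n][x0[n]]) for n, tok in enumerate(xt) if tok == MASK)
    return dalpha(t) / (1.0 - alpha(t)) * log_lik


def sample_loss(x0, model, rng):
    """Draw t uniformly on (0, 1], avoiding the t = 0 singularity, then estimate the loss."""
    t = 1.0 - rng.random()
    return loss_estimate(x0, model, t, rng)
```

As a sanity check, a model that outputs all-zero logits over K tokens predicts the uniform distribution. At t = 1 every token is masked, the weight is -1, and the estimate is exactly N log K for a length-N sequence, the negative log-likelihood of a uniform model.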

The researchers also study sampling from the discrete-time reverse process, covering both unconditional and conditional generation. They find that ancestral sampling yields slightly higher sample quality than alternatives such as Euler discretization. For conditional generation tasks such as infilling, they recommend keeping the conditioning tokens unmasked throughout generation. A key finding concerns how time discretization interacts with the masking schedule: switching from a linear to a cosine schedule improved the Fréchet Inception Distance (FID) on ImageNet 64×64 from 70 to 17 with 256 sampling steps. The researchers hypothesize that the cosine schedule works better because it exploits information redundancy: it reveals only a few tokens in early steps and more in later ones, when the already-unmasked tokens make the remaining ones more predictable, which reduces conflicts between tokens unmasked in the same step.
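A sketch of ancestral sampling with an optional infilling constraint, under our own assumptions: the `model` callable (returning one probability vector over K clean tokens per position) is hypothetical, and the update is the standard absorbing-state reverse step, in which a still-masked token is revealed when moving from t to s < t with probability (α_s - α_t) / (1 - α_t), while already-revealed tokens never change. The `reveal_fractions` helper illustrates the schedule effect described above: the cosine schedule reveals far fewer tokens than the linear one in the first steps and more in the last.

```python
import math
import random

MASK = -1  # hypothetical integer id for the mask state


def alpha_linear(t):
    return 1.0 - t


def alpha_cosine(t):
    return 1.0 - math.cos(math.pi / 2 * (1.0 - t))


def ancestral_sample(model, length, num_steps, alpha=alpha_cosine, cond=None, rng=random):
    """Generate from t = 1 (all masked) down to t = 0 in num_steps steps.

    cond maps position -> token. Those conditioning tokens start unmasked and,
    because only MASK entries are ever updated, stay fixed throughout (infilling).
    """
    cond = cond or {}
    x = [cond.get(n, MASK) for n in range(length)]
    for i in range(num_steps, 0, -1):
        t, s = i / num_steps, (i - 1) / num_steps
        if i == 1:
            p_unmask = 1.0  # alpha_0 = 1: reveal every remaining token at the last step
        else:
            p_unmask = (alpha(s) - alpha(t)) / (1.0 - alpha(t))
        probs = model(x, t)  # one probability vector over clean tokens per position
        for n in range(length):
            if x[n] == MASK and rng.random() < p_unmask:
                x[n] = rng.choices(range(len(probs[n])), weights=probs[n])[0]
    return x


def reveal_fractions(alpha, num_steps):
    """Expected fraction of the sequence revealed at each step, from t = 1 to t = 0."""
    return [alpha((i - 1) / num_steps) - alpha(i / num_steps) for i in range(num_steps, 0, -1)]
```

With `num_steps=256`, the first cosine step reveals roughly 2×10⁻⁵ of the sequence versus 1/256 for the linear schedule, so early steps under the cosine schedule commit to very few tokens at once.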

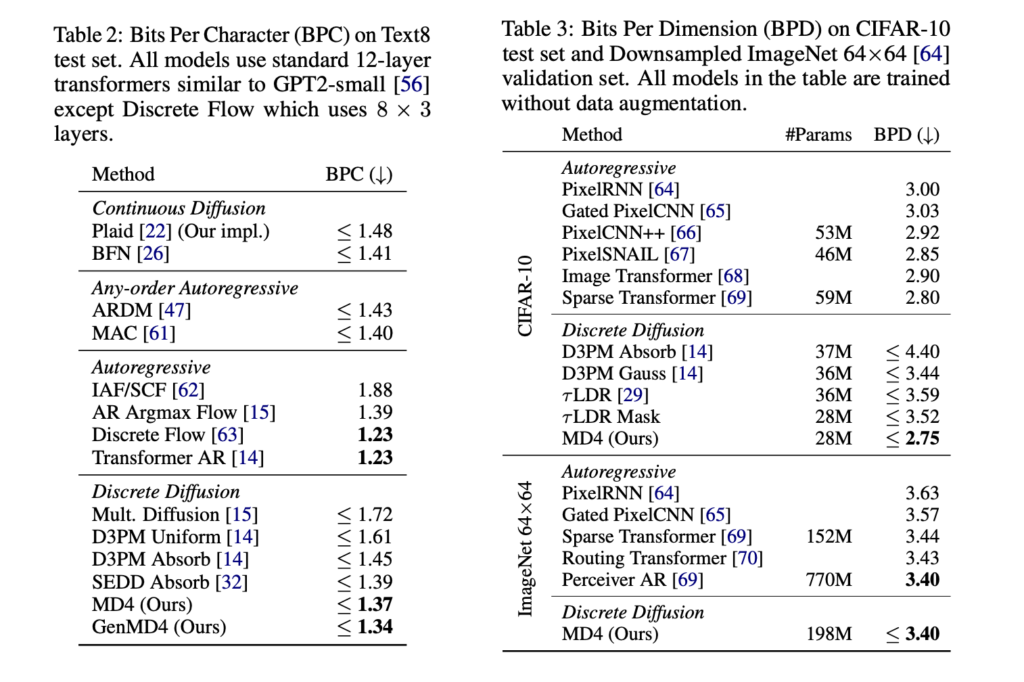

The researchers conducted comprehensive experiments on text and image modeling to validate their masked diffusion approach. For text, they used two datasets: text8 (character-level text from Wikipedia) and OpenWebText. They introduced two model variants: MD4 (Masked Discrete Diffusion for Discrete Data) and GenMD4, a generalized model with state-dependent masking schedules. On OpenWebText, their models at the GPT-2 small and medium scales outperformed previous discrete diffusion models in zero-shot perplexity across five benchmark datasets. The models consistently achieved better results than GPT-2, with particularly strong performance on datasets such as WikiText2, Penn Treebank, and One Billion Words. Notably, the researchers also observed faster convergence and more stable training than with previous approaches.
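To make "state-dependent" concrete, here is a hypothetical sketch in which each token value v gets its own masking schedule. We assume a polynomial form α_t(v) = 1 - t^(w_v) with a per-token exponent w_v; the dictionary `w` and all names are illustrative, and the exponents are fixed by hand here rather than obtained the way GenMD4 obtains its schedule. A larger exponent keeps that token unmasked for longer.

```python
import random

MASK = -1  # hypothetical integer id for the mask state


def alpha_poly(t, w):
    """Assumed polynomial schedule alpha_t = 1 - t**w (w > 0); larger w keeps a token longer."""
    return 1.0 - t ** w


def forward_mask_state_dependent(tokens, t, w, rng=random):
    """Mask each token using the schedule of its own value; w maps token value -> exponent."""
    return [tok if rng.random() < alpha_poly(t, w[tok]) else MASK for tok in tokens]
```

With `w = {0: 0.5, 1: 4.0}` at t = 0.5, token 0 survives with probability 1 - 0.5^0.5 ≈ 0.29 while token 1 survives with probability 1 - 0.5^4 = 0.9375, so the two values are masked at very different rates at the same time t.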

In summary, the study makes several key contributions to masked diffusion. The researchers address the complexity and accessibility problems of existing masked diffusion models with a flexible continuous-time formulation and a remarkably simple Evidence Lower Bound expression. Expressing the ELBO as a weighted integral of cross-entropy losses simplifies the training objective, whose complexity had previously hindered model performance. They introduced two model variants, MD4 and GenMD4, the latter using a state-dependent masking schedule. Their experiments show significant improvements across domains. On text, MD4 outperformed existing discrete and continuous diffusion models, while on pixel-level image modeling, the approach achieved likelihoods competitive with continuous diffusion models and surpassed similar-sized autoregressive models. GenMD4 further improved likelihoods, showcasing the potential of state-dependent diffusion.

Check out the Paper and GitHub Page.