Leveraging multiple LLMs concurrently demands significant computational resources, driving up costs and introducing latency challenges. In the evolving landscape of AI, efficient LLM orchestration is essential for optimizing performance while minimizing expenses.

Explore key strategies and tools for managing multiple LLMs effectively.

Here is a list of LLM orchestration tools sorted according to the number of GitHub stars.

What is LLM orchestration?

LLM orchestration refers to the coordinated management and integration of multiple Large Language Models (LLMs) to perform complex tasks effectively. It coordinates the interaction between models, workflows, data sources, and pipelines so that they perform optimally as a unified system. Organizations increasingly rely on LLM orchestration for applications such as natural language generation, machine translation, decision-making, and chatbots.

While LLMs possess strong foundational capabilities, they are limited in real-time learning, retaining context, and solving multistep problems. In addition, managing multiple LLMs across different provider APIs adds complexity.

LLM orchestration frameworks address these challenges by streamlining prompt engineering, API interactions, data retrieval, and state management. These frameworks enable LLMs to collaborate efficiently, enhancing their ability to generate accurate and context-aware outputs.

What is the best platform for LLM orchestration?

LLM orchestration frameworks are tools designed to manage, coordinate, and optimize the use of Large Language Models (LLMs) in various applications. An LLM orchestration system integrates different AI components, facilitates prompt engineering, manages workflows, and supports performance monitoring.

They are particularly useful for applications involving multi-agent systems, retrieval-augmented generation (RAG), conversational AI, and autonomous decision-making.

The tools described below are listed in alphabetical order:

Agency Swarm

Agency Swarm is a scalable Multi-Agent System (MAS) framework that provides tools for building distributed AI environments.

Key features:

- Supports large-scale multi-agent coordination that enables many AI agents to work together efficiently.

- Includes simulation and visualization tools that help test and monitor agent interactions in a simulated environment.

- Enables environment-based AI interactions, in which agents respond dynamically to changing conditions.

AutoGen

AutoGen, developed by Microsoft, is an open-source multi-agent orchestration framework that simplifies AI task automation using conversational agents.

Key features:

- Multi-agent conversation framework that allows AI agents to communicate and coordinate tasks.

- Supports various AI models (OpenAI, Azure, custom models), so it works with different LLM providers.

- Modular, easy-to-configure system that can be customized for various AI applications.
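
AutoGen's actual classes are not reproduced here. As a framework-agnostic sketch of the multi-agent conversation pattern only, the code below alternates turns between two agents until one of them emits a stop word. The `Agent` class, `run_conversation`, the `TERMINATE` stop word as used here, and the stubbed `coder` and `reviewer` functions (which stand in for LLM calls) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A message is a (sender_name, text) pair.
Message = Tuple[str, str]


@dataclass
class Agent:
    """Hypothetical conversational agent; `reply_fn` stands in for an LLM call."""
    name: str
    reply_fn: Callable[[List[Message]], str]


def run_conversation(first: Agent, second: Agent, opening: str,
                     max_turns: int = 6) -> List[Message]:
    """Alternate turns until a reply contains TERMINATE or max_turns is reached."""
    history: List[Message] = [(first.name, opening)]
    speakers = [second, first]  # `second` answers the opening message
    for turn in range(max_turns):
        speaker = speakers[turn % 2]
        text = speaker.reply_fn(history)
        history.append((speaker.name, text))
        if "TERMINATE" in text:  # stop word assumed by this sketch
            break
    return history


# Stubbed "models" so the sketch runs without any API.
def coder(history: List[Message]) -> str:
    return "def add(x, y): return x + y"


def reviewer(history: List[Message]) -> str:
    return "Looks correct. TERMINATE"


if __name__ == "__main__":
    log = run_conversation(Agent("reviewer", reviewer), Agent("coder", coder),
                           "Write an add function.")
    for sender, text in log:
        print(f"{sender}: {text}")
```

In a real framework, each `reply_fn` would send the conversation history to a model; the turn cap keeps a pair of agents from looping forever if neither ever signals completion.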

crewAI

crewAI is an open-source multi-agent framework, originally built on LangChain, that enables role-playing AI agents to collaborate on structured tasks.

Key features:

- Agent-based workflow automation that assigns AI agents specific roles in task execution.

- Supports both technical and non-technical users

- Enterprise version (crewAI+) available

Haystack

Haystack is an open-source Python framework that allows for flexible AI pipeline creation using a component-based approach. It supports information retrieval and Q&A applications.

Key features:

- Component-based AI system design, a modular approach for assembling AI functions.

- Integration with vector databases and LLM providers, enabling it to work with various data stores and AI models.

- Supports semantic search and information extraction, enabling advanced search and knowledge retrieval.
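
To illustrate the component-based idea, here is a minimal sketch of a linear pipeline in which each component reads from and writes to a shared state dictionary. This is not Haystack's `Pipeline` API (which wires named component inputs and outputs together); `LinearPipeline`, `keyword_retriever`, and `prompt_builder` are hypothetical, and the keyword match is a toy stand-in for semantic retrieval.

```python
from typing import Any, Callable, Dict, List, Tuple

# A component reads the shared state and returns new keys to merge into it.
Component = Callable[[Dict[str, Any]], Dict[str, Any]]


class LinearPipeline:
    """Hypothetical minimal pipeline that runs components in insertion order."""

    def __init__(self) -> None:
        self.components: List[Tuple[str, Component]] = []

    def add_component(self, name: str, component: Component) -> "LinearPipeline":
        self.components.append((name, component))
        return self  # allow chaining

    def run(self, inputs: Dict[str, Any]) -> Dict[str, Any]:
        state = dict(inputs)
        for _name, component in self.components:
            state.update(component(state))  # each step sees all earlier outputs
        return state


DOCS = ["Haystack builds pipelines from components.",
        "Paris is the capital of France."]


def keyword_retriever(state: Dict[str, Any]) -> Dict[str, Any]:
    # Toy retriever: keep documents that share at least one word with the query.
    query_words = set(state["query"].lower().split())
    hits = [d for d in DOCS if query_words & set(d.lower().rstrip(".").split())]
    return {"documents": hits}


def prompt_builder(state: Dict[str, Any]) -> Dict[str, Any]:
    context = "\n".join(state["documents"])
    return {"prompt": f"Context:\n{context}\n\nQuestion: {state['query']}"}


pipeline = (LinearPipeline()
            .add_component("retriever", keyword_retriever)
            .add_component("prompt_builder", prompt_builder))
print(pipeline.run({"query": "capital of France"})["prompt"])
```

Because components only agree on dictionary keys, a retriever or prompt builder can be swapped without touching the rest of the pipeline, which is the main benefit of the component-based approach.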

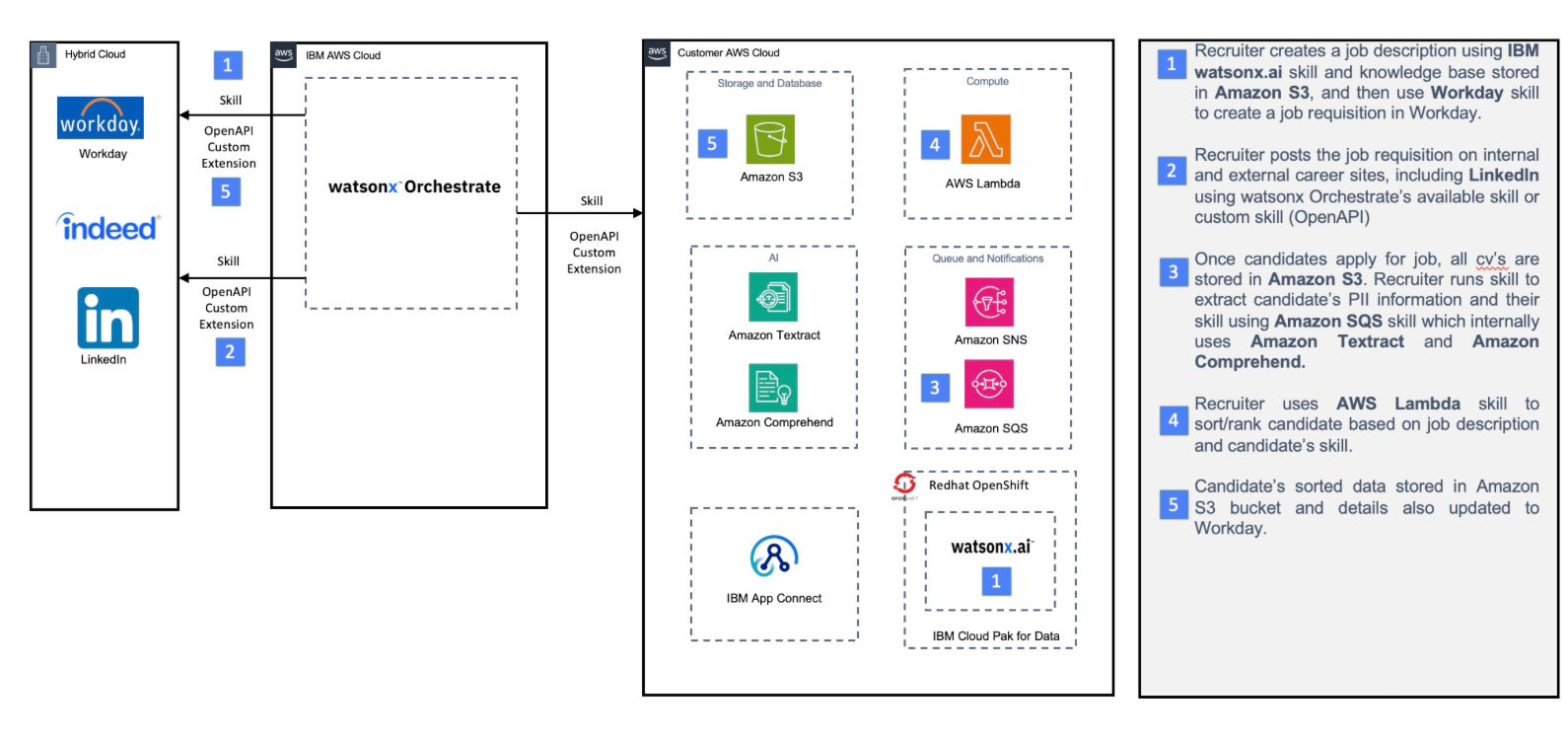

IBM watsonx Orchestrate

IBM watsonx Orchestrate is a proprietary AI orchestration framework that leverages natural language processing (NLP) to automate enterprise workflows. It includes prebuilt AI applications and tools designed for HR, procurement, and sales operations.

Key features:

- AI-powered workflow automation for repetitive business processes.

- Prebuilt applications and skill sets, providing ready-to-use AI tools for different industries.

- Enterprise-focused integration, connecting with existing enterprise software and workflows.

LangChain

LangChain is an open-source Python framework for building LLM applications, focusing on tool augmentation and agent orchestration. It provides interfaces for embedding models, LLMs, and vector stores.

Key features:

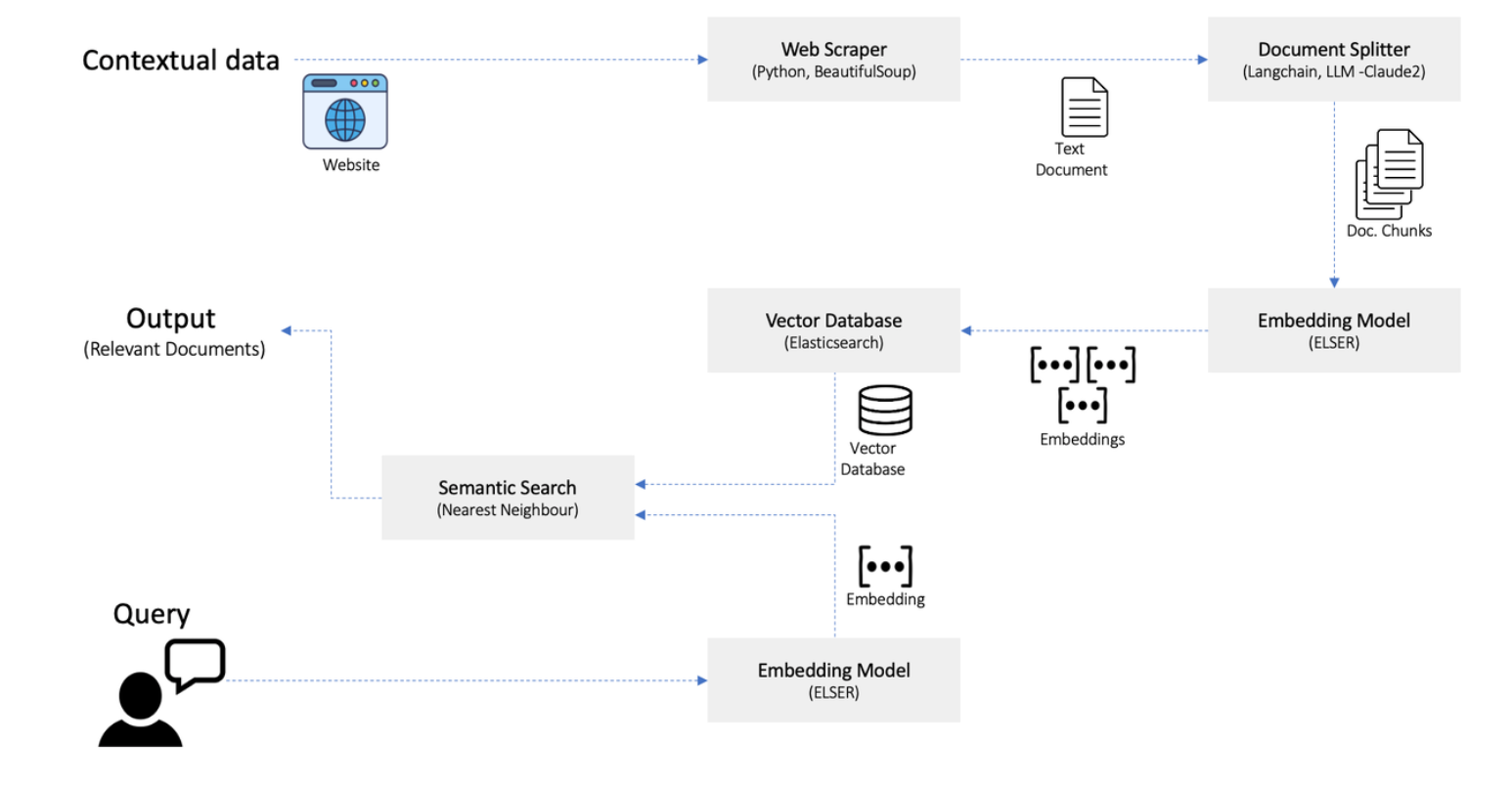

- RAG support

- Integration with multiple LLM components

- ReAct framework for reasoning and action
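
The ReAct pattern interleaves model reasoning ("Thought"), tool calls ("Action"), and tool results ("Observation") until the model produces a final answer. The loop below is a simplified, framework-agnostic sketch of that idea, not LangChain's agent implementation; the `Action: tool[input]` text format, the toy `calculator` tool, and `scripted_llm` (a stand-in for a real model) are assumptions.

```python
import re
from typing import Callable, Dict

# Hypothetical tool registry: tool name -> function of one string argument.
# The toy calculator only sums integers separated by "+".
TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}

ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def react_loop(llm: Callable[[str], str], question: str, max_steps: int = 5) -> str:
    """Alternate model Thought/Action steps with tool Observations until a Final Answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match:
            tool, arg = match.groups()
            transcript += f"Observation: {TOOLS[tool](arg)}\n"
    raise RuntimeError("no final answer within max_steps")


def scripted_llm(transcript: str) -> str:
    # Stand-in for a real model: act once, then answer from the latest observation.
    if "Observation:" not in transcript:
        return "Thought: I should add the numbers.\nAction: calculator[2+3]"
    observed = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {observed}"


print(react_loop(scripted_llm, "What is 2 + 3?"))
```

The `max_steps` limit matters in practice: a real model may keep requesting tools, and the loop should fail loudly rather than run indefinitely.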

LlamaIndex

LlamaIndex is an open-source data integration framework designed for building context-augmented LLM applications. It enables easy retrieval of data from multiple sources.

Key features:

- Data connectors for over 160 sources, allowing AI to access diverse structured and unstructured data.

- Retrieval-Augmented Generation (RAG) support

- Suite of evaluation modules for performance tracking

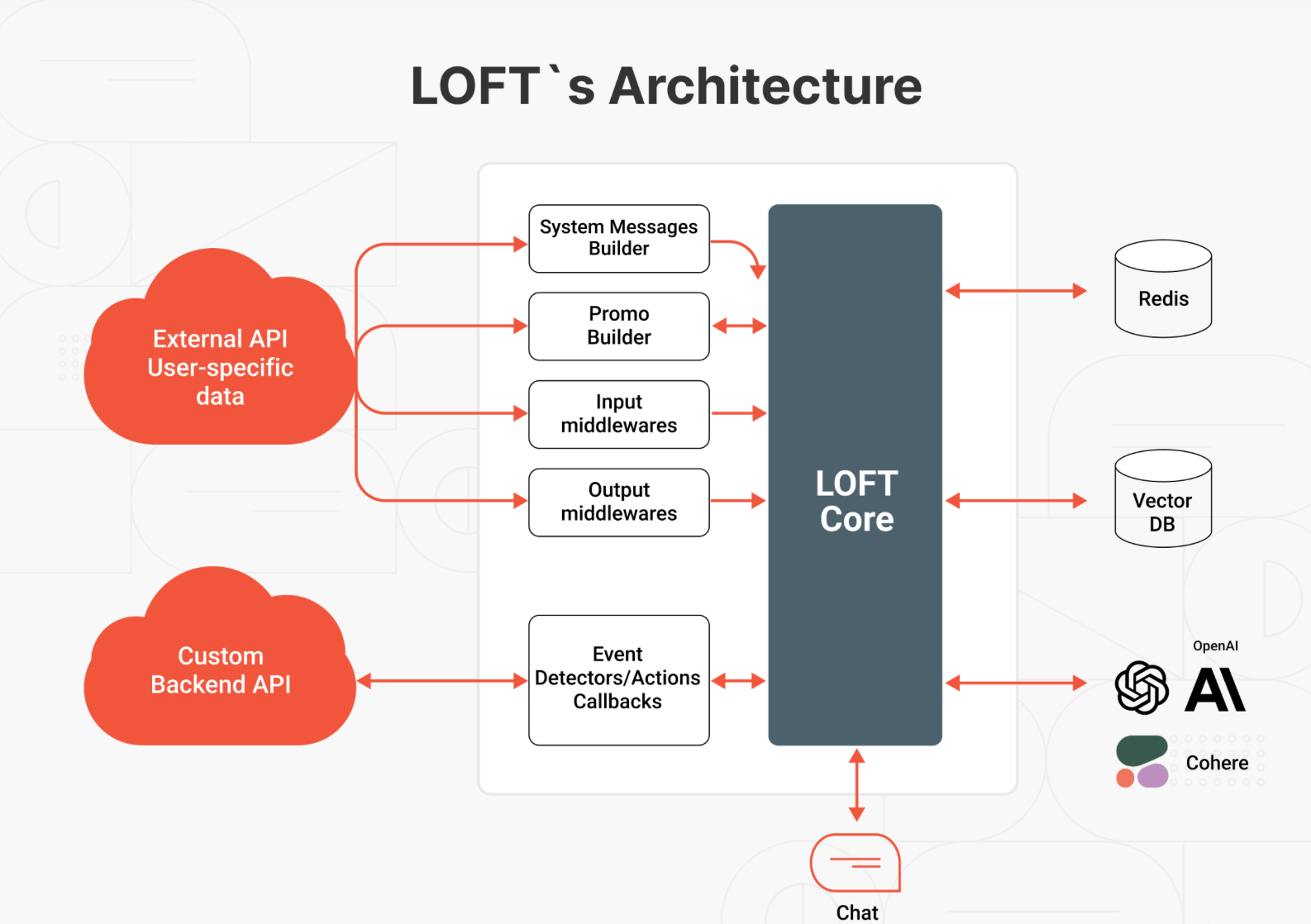

LOFT

LOFT, developed by Master of Code Global, is a Large Language Model-Orchestrator Framework designed to optimize AI-driven customer interactions. Its queue-based architecture ensures high throughput and scalability, making it suitable for large-scale deployments.

Key features:

- Framework agnostic: Integrates into any backend system without dependencies on HTTP frameworks.

- Dynamically computed prompts: Supports custom-generated prompts for personalized user interactions.

- Event detection & handling: Advanced capabilities for detecting and managing chat-based events, including handling hallucinations.

Microchain

Microchain is a lightweight, open-source LLM orchestration framework known for its simplicity, although it is not actively maintained.

Key features:

- Chain-of-thought reasoning support that helps AI break down complex problems step by step.

- Minimalist approach to AI orchestration

Semantic Kernel

Semantic Kernel (SK) is an open-source AI orchestration framework by Microsoft. It helps developers integrate large language models (LLMs) like OpenAI’s GPT with traditional programming to create AI-powered applications.

Key features:

- Memory & context handling: SK allows storage and retrieval of past interactions, helping maintain context over conversations.

- Embeddings & vector search: Supports embedding-based searches, making it well suited to retrieval-augmented generation (RAG) use cases.

- Multi-modal support: Works with text, code, images, and more.

TaskWeaver

TaskWeaver is an experimental, code-first open-source framework for executing tasks in AI applications. It prioritizes modular task decomposition.

Key features:

- Modular design for decomposing tasks that breaks down complex processes into manageable AI-driven steps.

- Declarative task specification, allowing tasks to be defined in a structured format.

- Context-aware decision-making, allowing AI to adapt its actions based on changing inputs.
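
Declarative task specification combined with task decomposition can be sketched as a dependency graph executed in topological order. This is not TaskWeaver's API; the `TASKS` dictionary format and the `run_tasks` helper are hypothetical, and the example relies on Python's standard-library `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Any, Dict

# Hypothetical declarative spec: each task lists its dependencies and a function
# that receives those dependencies' results as positional arguments.
TASKS: Dict[str, Dict[str, Any]] = {
    "load":      {"deps": [], "fn": lambda: [3, 1, 2]},
    "sort":      {"deps": ["load"], "fn": lambda data: sorted(data)},
    "summarize": {"deps": ["sort"], "fn": lambda d: f"min={d[0]}, max={d[-1]}"},
    "mean":      {"deps": ["sort"], "fn": lambda d: sum(d) / len(d)},
    "report":    {"deps": ["summarize", "mean"], "fn": lambda s, m: f"{s}, mean={m}"},
}


def run_tasks(spec: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Execute tasks so that every task runs after all of its dependencies."""
    graph = {name: set(task["deps"]) for name, task in spec.items()}
    results: Dict[str, Any] = {}
    for name in TopologicalSorter(graph).static_order():
        task = spec[name]
        results[name] = task["fn"](*(results[d] for d in task["deps"]))
    return results


print(run_tasks(TASKS)["report"])
```

Keeping the specification declarative means the execution order is derived from the dependencies rather than hard-coded, so adding a step only requires a new entry in the spec.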

LLM orchestration frameworks manage the interaction between different components of LLM-driven applications, ensuring structured workflows and efficient execution. The orchestration layer plays a central role in coordinating processes such as prompt management, resource allocation, data preprocessing, and model interactions.

Orchestration Layer

The orchestration layer acts as the central control system within an LLM-powered application. It manages interactions between various components, including LLMs, prompt templates, vector databases, and AI agents. By overseeing these elements, orchestration ensures cohesive performance across different tasks and environments.

Key Orchestration Tasks

Prompt chain management

- The framework structures and manages LLM inputs (prompts) to optimize output.

- It provides a repository of prompt templates, allowing for dynamic selection based on context and user inputs.

- It sequences prompts logically to maintain structured conversation flows.

- It evaluates responses to refine output quality, detect inconsistencies, and ensure adherence to guidelines.

- Fact-checking mechanisms can be implemented to reduce inaccuracies, with flagged responses routed to human review.
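
A minimal sketch of these prompt chain tasks, assuming a hypothetical in-memory template repository (`TEMPLATES`) and a caller-supplied `llm` function: templates are filled with `string.Template`, run in sequence with each output feeding the next step, and outputs that fail a deliberately simple placeholder check are flagged for human review. Real fact-checking would be far more involved than a banned-phrase list.

```python
from string import Template
from typing import Callable, Dict, List, Tuple

# Hypothetical prompt-template repository keyed by template name.
TEMPLATES: Dict[str, Template] = {
    "summarize": Template("Summarize the following text in one sentence:\n$text"),
    "translate": Template("Translate into $language:\n$text"),
}

# Placeholder guideline check: phrases that should never appear in an answer.
BANNED_PHRASES = ("as an ai",)


def run_chain(llm: Callable[[str], str],
              steps: List[Tuple[str, Dict[str, str]]],
              text: str) -> Dict[str, object]:
    """Run templates in order, feeding each output into the next step's $text.

    Empty outputs or outputs containing a banned phrase are flagged for human review.
    """
    flagged: List[str] = []
    for name, params in steps:
        prompt = TEMPLATES[name].substitute(params, text=text)
        text = llm(prompt)
        if not text.strip() or any(p in text.lower() for p in BANNED_PHRASES):
            flagged.append(name)
    return {"output": text, "flagged": flagged}


def echo_llm(prompt: str) -> str:
    # Stand-in model: upper-cases the last line of the prompt.
    return prompt.splitlines()[-1].upper()


print(run_chain(echo_llm, [("summarize", {}), ("translate", {"language": "French"})],
                "hello world"))
```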

LLM resource and performance management

- Orchestration frameworks monitor LLM performance through benchmark tests and real-time dashboards.

- They provide diagnostic tools for root cause analysis (RCA) to facilitate debugging.

- They allocate computational resources efficiently to optimize performance.

Data management and preprocessing

- The orchestrator retrieves data from specified sources using connectors or APIs.

- Preprocessing converts raw data into a format compatible with LLMs, ensuring data quality and relevance.

- It refines and structures data to enhance its suitability for processing by different algorithms.
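
As one example of preprocessing, the sketch below normalizes raw text and splits it into overlapping word windows, a common way to prepare documents for embedding and retrieval. The function names and default window sizes are assumptions for illustration; many pipelines chunk by tokens or sentences instead of words.

```python
import re
from typing import List


def preprocess(raw: str) -> str:
    """Replace control characters with spaces and collapse runs of whitespace."""
    cleaned = re.sub(r"[\x00-\x08\x0b-\x1f\x7f]", " ", raw)
    return re.sub(r"\s+", " ", cleaned).strip()


def chunk_words(text: str, size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into windows of `size` words; adjacent windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop once the remaining words are already covered by the previous window's overlap.
    return [" ".join(words[i:i + size])
            for i in range(0, max(len(words) - overlap, 1), step)]


print(chunk_words(preprocess("a b\tc\x00 d e\n f g h"), size=4, overlap=1))
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side, at the cost of storing a few words twice.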

LLM integration and interaction

- The orchestrator initiates LLM operations, processes the generated output, and routes it to the appropriate destination.

- It maintains memory stores that enhance contextual understanding by preserving previous interactions.

- Feedback mechanisms assess output quality and refine responses based on historical data.
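
A memory store can be as simple as a sliding window of recent exchanges that is prepended to each new prompt. The sketch below assumes a caller-supplied `llm` function; `ConversationMemory` and `chat` are hypothetical names, and real frameworks often add summarization or vector-based recall on top of this.

```python
from collections import deque
from typing import Callable, Deque, Tuple


class ConversationMemory:
    """Hypothetical memory store that keeps only the most recent `max_turns` exchanges."""

    def __init__(self, max_turns: int = 5) -> None:
        # A bounded deque silently drops the oldest turn once it is full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def as_context(self) -> str:
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns)


def chat(llm: Callable[[str], str], memory: ConversationMemory, user_msg: str) -> str:
    """Prepend remembered turns to the prompt, call the model, then store the new turn."""
    context = memory.as_context()
    prompt = f"User: {user_msg}\nAssistant:"
    if context:
        prompt = f"{context}\n{prompt}"
    reply = llm(prompt)
    memory.add(user_msg, reply)
    return reply


memory = ConversationMemory(max_turns=2)
turn_counter = lambda prompt: f"I can see {prompt.count('User:')} user message(s)."
for msg in ["hi", "again", "third", "fourth"]:
    print(chat(turn_counter, memory, msg))
```

The window size trades context quality against prompt length (and therefore cost and latency).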

Observability and security measures

- The orchestrator supports monitoring tools to track model behavior and ensure output reliability.

- It implements security frameworks to mitigate risks associated with unverified or inaccurate outputs.
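
One lightweight way to combine monitoring with an output safeguard is to wrap every model call: record latency and sizes, log them, and withhold responses that fail a validation check. The `observed` wrapper and the email-address check below are hypothetical examples; real deployments typically export such metrics to a dedicated monitoring system.

```python
import logging
import re
import time
from typing import Callable, Dict, List

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("llm.observability")

# Hypothetical safeguard: block responses that contain something shaped like an email address.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def no_email(text: str) -> bool:
    return EMAIL_RE.search(text) is None


def observed(llm: Callable[[str], str], records: List[Dict[str, object]],
             validator: Callable[[str], bool]) -> Callable[[str], str]:
    """Wrap a model call to record latency and sizes, and withhold invalid outputs."""
    def wrapper(prompt: str) -> str:
        start = time.perf_counter()
        output = llm(prompt)
        latency_ms = (time.perf_counter() - start) * 1000
        valid = validator(output)
        records.append({"prompt_chars": len(prompt), "output_chars": len(output),
                        "latency_ms": latency_ms, "valid": valid})
        logger.info("llm call took %.1f ms, valid=%s", latency_ms, valid)
        return output if valid else "[response withheld: failed validation]"
    return wrapper


records: List[Dict[str, object]] = []
guarded = observed(lambda p: "Contact bob@example.com", records, no_email)
print(guarded("Who handles refunds?"))
```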

Additional Enhancements

Workflow integration

- Embeds tools, technologies, or processes into existing operational systems to improve efficiency, consistency, and productivity.

- Ensures smooth transitions between different model providers while maintaining prompt and output quality.

Changing model providers

- Some frameworks allow switching model providers with minimal changes, reducing operational friction.

- Typically, switching requires only updating provider imports, adjusting model parameters, and changing class references.
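
Switching providers with minimal changes can be sketched with a shared interface and a configuration-driven registry, so application code never refers to a vendor class directly. `ChatModel`, `ProviderA`, `ProviderB`, and `make_model` are hypothetical; real adapters would wrap each vendor's SDK.

```python
from typing import Callable, Dict, Protocol


class ChatModel(Protocol):
    """Interface that every provider adapter must satisfy."""
    def complete(self, prompt: str, temperature: float = 0.0) -> str: ...


# Hypothetical adapters; real ones would wrap each vendor's SDK.
class ProviderA:
    def complete(self, prompt: str, temperature: float = 0.0) -> str:
        return f"[A t={temperature}] {prompt}"


class ProviderB:
    def complete(self, prompt: str, temperature: float = 0.0) -> str:
        return f"[B t={temperature}] {prompt}"


REGISTRY: Dict[str, Callable[[], ChatModel]] = {"a": ProviderA, "b": ProviderB}


def make_model(config: Dict[str, str]) -> ChatModel:
    """Select the provider named in configuration instead of importing it directly."""
    return REGISTRY[config["provider"]]()


def answer(model: ChatModel, question: str) -> str:
    # Application code depends only on the ChatModel interface.
    return model.complete(question, temperature=0.2)


print(answer(make_model({"provider": "a"}), "Hello"))
print(answer(make_model({"provider": "b"}), "Hello"))  # switched by config only
```

With this structure, the import, parameter, and class-reference changes described above are confined to the adapter and the registry entry.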

Prompt management

- Maintains consistency in prompting while helping users iterate and experiment more productively.

- Integrates with CI/CD pipelines to streamline collaboration and automate change tracking.

- Some systems automatically track prompt modifications, helping catch unexpected impacts on prompt quality.
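
Automatic tracking of prompt modifications can be sketched as a registry that hashes each template and records a new version only when the text actually changes. `PromptRegistry` and its methods are hypothetical; a CI job could, for example, run regression evaluations whenever `changed_since` reports a change.

```python
import hashlib
from typing import Dict, List, Tuple


class PromptRegistry:
    """Hypothetical store that records a new version only when a prompt's text changes."""

    def __init__(self) -> None:
        # name -> list of (version, sha256 digest, text), oldest first
        self.history: Dict[str, List[Tuple[int, str, str]]] = {}

    def register(self, name: str, text: str) -> int:
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        versions = self.history.setdefault(name, [])
        if versions and versions[-1][1] == digest:
            return versions[-1][0]  # unchanged text keeps the current version
        versions.append((len(versions) + 1, digest, text))
        return len(versions)

    def latest(self, name: str) -> str:
        return self.history[name][-1][2]

    def changed_since(self, name: str, version: int) -> bool:
        """True if the latest version differs from `version` (e.g. for a CI check)."""
        return self.history[name][-1][0] != version


registry = PromptRegistry()
v1 = registry.register("greet", "Say hello to $name.")
registry.register("greet", "Say hello to $name.")  # no-op: same text
v2 = registry.register("greet", "Greet $name warmly.")
print(v1, v2, registry.changed_since("greet", v1))
```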

Why is LLM orchestration important in real-time applications?

LLM orchestration enhances the efficiency, scalability, and reliability of AI-driven language solutions by optimizing resource utilization, automating workflows, and improving system performance. Key benefits include:

- Better decision-making: Aggregates insights from multiple LLMs, leading to more informed and strategic decision-making.

- Cost efficiency: Optimizes costs by dynamically allocating resources based on workload demand.

- Enhanced efficiency: Streamlines LLM interactions and workflows, reducing redundancy, minimizing manual effort, and improving overall operational efficiency.

- Fault tolerance: Detects failures and automatically redirects traffic to healthy LLM instances, minimizing downtime and maintaining service availability.

- Improved accuracy: Leverages multiple LLMs to enhance language understanding and generation, leading to more precise and context-aware outputs.

- Load balancing: Distributes requests across multiple LLM instances to prevent overload, ensuring reliability and improving response times.

- Lowered technical barriers: Makes implementation easier for teams without deep AI expertise, with user-friendly tools like LangFlow simplifying orchestration.

- Dynamic resource allocation: Allocates CPU, GPU, memory, and storage efficiently, ensuring optimal model performance and cost-effective operation.

- Risk mitigation: Reduces failure risks by ensuring redundancy, allowing multiple LLMs to back up one another.

- Scalability: Dynamically manages and integrates LLMs, allowing AI systems to scale up or down based on demand without performance degradation.

- Seamless integration: Supports interoperability with external services, including data storage, logging, monitoring, and analytics.

- Security & compliance: Centralized control and monitoring help ensure adherence to regulatory standards and strengthen the security and privacy of sensitive data.

- Version control & updates: Facilitates seamless model updates and version management without disrupting operations.

- Workflow automation: Automates complex processes such as data preprocessing, model training, inference, and postprocessing, reducing developer workload.
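
The fault-tolerance and load-balancing benefits can be illustrated with a small router that cycles through backends in round-robin order and fails over to the next one when a call raises an error. `LLMRouter` and the stub backends are hypothetical; this sketch never re-admits a failed backend, whereas a real system would add periodic health checks.

```python
import itertools
from typing import Callable, Dict, List, Set


class LLMRouter:
    """Hypothetical router: round-robin load balancing with failover on errors."""

    def __init__(self, backends: Dict[str, Callable[[str], str]]) -> None:
        self.backends = backends
        self._order = itertools.cycle(list(backends))
        self.unhealthy: Set[str] = set()

    def call(self, prompt: str) -> str:
        errors: List[str] = []
        # One pass around the cycle visits every backend exactly once.
        for _ in range(len(self.backends)):
            name = next(self._order)
            if name in self.unhealthy:
                continue
            try:
                return self.backends[name](prompt)
            except Exception as exc:  # any backend error counts as a failure
                self.unhealthy.add(name)  # stop routing traffic to it
                errors.append(f"{name}: {exc}")
        raise RuntimeError("all backends failed: " + "; ".join(errors))


def make_backend(label: str) -> Callable[[str], str]:
    return lambda prompt: f"{label}:{prompt}"


def broken_backend(prompt: str) -> str:
    raise ConnectionError("timeout")


router = LLMRouter({"a": make_backend("a"), "b": broken_backend, "c": make_backend("c")})
print([router.call("q") for _ in range(4)])
```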

FAQs

What is LLM in AI?

A Large Language Model (LLM) is an advanced AI system designed to process and generate human-like text. It is trained on vast datasets using deep learning techniques, particularly transformers, to understand language patterns, context, and semantics. LLMs can answer questions, summarize content, generate text, and even engage in conversations. They are used in chatbots, virtual assistants, content creation, and coding assistance. OpenAI’s GPT models, Google’s Gemini, and Meta’s LLaMA are examples. LLMs continue to evolve, enhancing AI-driven applications in industries like healthcare, law, and customer service.

LLM components

- LLM Model: A Large Language Model (LLM) processes vast amounts of data to understand and generate human-like text. Open-source models offer flexibility, while closed-source ones provide ease of use and support. General-purpose LLMs handle various tasks, while domain-specific models cater to specialized industries.

- Prompts: Effective prompts guide LLM responses.

  - Zero-shot prompts: Ask for a response without providing any examples.

  - Few-shot prompts: Include a few examples to improve accuracy.

  - Chain-of-thought prompts: Encourage step-by-step reasoning for better responses.

- Vector Database: Stores data as numerical vectors (embeddings). LLM applications use similarity searches to retrieve relevant context, improving accuracy and reducing outdated responses.

- Agents and Tools: Extend LLM capabilities by running web searches, executing code, or querying databases. These enhance AI-driven automation and business solutions.

- Orchestrator: Integrates LLMs, prompts, vector databases, and agents into a cohesive system. Ensures smooth coordination for efficient AI-powered applications.

- Monitoring: Tracks performance, detects anomalies, and logs interactions. Ensures high-quality responses and helps mitigate errors in LLM outputs.
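
The similarity search a vector database performs can be sketched as brute-force cosine similarity over stored embeddings. `InMemoryVectorStore` is a hypothetical stand-in, and the three-dimensional vectors below are made-up toy values; real embeddings come from an embedding model and have hundreds or thousands of dimensions, and production databases use approximate nearest-neighbor indexes rather than a full scan.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: exact (brute-force) cosine search."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, List[float]]] = []

    def add(self, text: str, embedding: List[float]) -> None:
        self.items.append((text, embedding))

    def search(self, query: List[float], k: int = 2) -> List[Tuple[str, float]]:
        scored = [(text, cosine(query, emb)) for text, emb in self.items]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


store = InMemoryVectorStore()
store.add("refund policy", [1.0, 0.0, 0.0])   # toy embeddings
store.add("shipping times", [0.0, 1.0, 0.0])
store.add("return window", [0.9, 0.1, 0.0])
print(store.search([1.0, 0.0, 0.0], k=2))
```

The retrieved texts would then be inserted into the prompt as context, which is how the orchestrator connects the vector database to the LLM.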

What is an example of an LLM?

One popular example of an LLM is GPT-4, developed by OpenAI. GPT-4 is a multimodal AI model capable of understanding and generating human-like text with remarkable accuracy. It can summarize information, answer complex questions, assist with coding, and create conversational agents. Businesses use GPT-4 for customer support, content generation, and automation. Other examples include Google’s Gemini, Meta’s LLaMA, and Anthropic’s Claude. These models improve efficiency across various industries, from marketing and education to software development. As LLMs advance, they continue to reshape how humans interact with AI-powered technologies.