Who doesn’t love music? Have you ever remembered the rhythm of a song but not the lyrics, and been unable to figure out its name? Researchers at Google and Osaka University have found a way to reconstruct music from brain activity recorded with functional magnetic resonance imaging (fMRI). The generated music resembles what the listener heard in terms of genre, instrumentation, and mood.

The researchers use deep neural networks to generate music from fMRI scans by first predicting high-level, semantically structured music features. Different components of the music can be predicted from activity in the human auditory cortex. The researchers also experimented with JukeBox, which generates music with high temporal coherence, although its output contains artifacts. To produce high-quality audio, a neural audio codec is used that compresses audio to low bitrates while still allowing high-quality reconstruction.

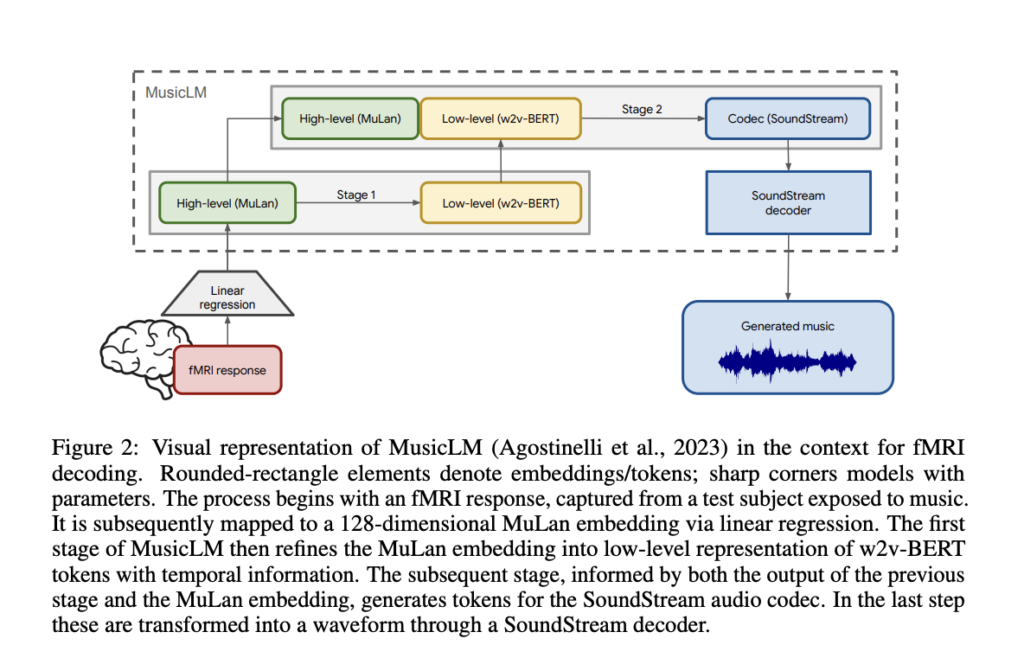

Generating music from fMRI requires an intermediate stage: choosing a music embedding to represent the music. In the researchers’ architecture, this embedding acts as a bottleneck for subsequent music generation. If the embedding predicted from fMRI is close to the embedding of the original stimulus heard by the subject, MusicLM (a music-generating model) can be used to generate music similar to the original stimulus.
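What "close" means for two embeddings can be illustrated with cosine similarity. The sketch below is only an illustration on synthetic vectors: the embedding size, the noise level, and the function name `cosine_similarity` are assumptions made for the example, not details taken from the paper.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine similarity between two 1-D embedding vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


rng = np.random.default_rng(0)
EMBED_DIM = 128  # hypothetical embedding size, chosen for illustration

# Stand-in for the embedding of the music the subject actually heard.
original = rng.normal(size=EMBED_DIM)
# Stand-in for an embedding predicted from fMRI: the original plus noise.
predicted = original + 0.3 * rng.normal(size=EMBED_DIM)
# Stand-in for the embedding of an unrelated music clip.
unrelated = rng.normal(size=EMBED_DIM)

sim_predicted = cosine_similarity(original, predicted)
sim_unrelated = cosine_similarity(original, unrelated)

print(f"predicted vs original: {sim_predicted:.3f}")
print(f"unrelated vs original: {sim_unrelated:.3f}")
```

A good prediction should score noticeably higher against the original stimulus than an unrelated clip does, which is the condition under which generating from the predicted embedding is meaningful.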

MusicLM, the music-generating model, works with audio-derived embeddings named MuLan and w2v-BERT-avg. Of the two, MuLan tends to have higher prediction performance than w2v-BERT-avg in the lateral prefrontal cortex, which suggests that it captures high-level music information processing in the human brain. Abstract information about music is represented differently in the auditory cortex than in audio-derived embeddings.

MuLan embeddings are converted into music using generative models, and information that is not contained in the embedding is filled in by the model. In the retrieval technique, the reconstruction is also musical because it is pulled directly from a dataset of music, which ensures a higher level of reconstruction quality. The embedding itself is predicted from fMRI response data by linear regression. This method has limitations, including uncertainty about exactly how much information linear regression can recover from the fMRI data.
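The regression and retrieval steps can be sketched on synthetic data. Everything here is assumed for illustration: the array sizes, the helper names `fit_ridge` and `retrieve`, and the small ridge penalty added for numerical stability. It is not the researchers' implementation, and the random arrays stand in for real fMRI responses and MuLan embeddings.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical sizes, chosen only to keep the example small.
N_TRAIN, N_VOXELS, EMBED_DIM, N_LIBRARY = 200, 50, 16, 30

# Synthetic "true" linear relation between fMRI responses and embeddings.
true_weights = rng.normal(size=(N_VOXELS, EMBED_DIM))
fmri_train = rng.normal(size=(N_TRAIN, N_VOXELS))
emb_train = fmri_train @ true_weights + 0.1 * rng.normal(size=(N_TRAIN, EMBED_DIM))


def fit_ridge(X, Y, alpha=1.0):
    """Linear regression with an L2 penalty (assumption), in closed form."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def retrieve(predicted, library):
    """Index of the library embedding with the highest cosine similarity."""
    lib_unit = library / np.linalg.norm(library, axis=1, keepdims=True)
    pred_unit = predicted / np.linalg.norm(predicted)
    return int(np.argmax(lib_unit @ pred_unit))


weights = fit_ridge(fmri_train, emb_train)

# A synthetic music library: each clip has an fMRI response and an embedding.
fmri_library = rng.normal(size=(N_LIBRARY, N_VOXELS))
emb_library = fmri_library @ true_weights

# Simulate a noisy fMRI response to clip number 7, then decode it.
target_index = 7
fmri_test = fmri_library[target_index] + 0.1 * rng.normal(size=N_VOXELS)
predicted_embedding = fmri_test @ weights
retrieved_index = retrieve(predicted_embedding, emb_library)

print("retrieved clip:", retrieved_index)
```

In this toy setup the relation between response and embedding is linear by construction, so retrieval works well; with real fMRI data, how much of the embedding a linear map can recover is exactly the open question the limitation above refers to.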

The researchers said that their future work includes reconstructing music from an individual’s imagination. When a user imagines a music clip, the decoding analysis would examine how faithfully the imagined music can be reconstructed. This would qualify for an actual mind-reading label. Subjects also differ in musical expertise, so the properties of their reconstructions would need to be compared. Comparing reconstruction quality between subjects, including professional musicians, could provide useful insights into the differences in their perspectives and understanding.

Their research is just the first step in bringing your pure, imaginative thoughts into existence. It could eventually lead to generating holograms purely from the imagination of the subject. Advances in this field will also provide a quantitative interpretation from a biological perspective.

Check out the Paper and Project Page. All credit for this research goes to the researchers on this project.

Arshad is an intern at MarktechPost. He is currently pursuing an Int. MSc in Physics at the Indian Institute of Technology Kharagpur. He believes that understanding things at a fundamental level leads to new discoveries, which in turn lead to advances in technology. He is passionate about understanding nature at a fundamental level with the help of tools like mathematical models, ML models, and AI.