Large Language Models (LLMs), trained on vast amounts of data, have shown remarkable abilities in natural language generation and understanding. They are typically trained on general-purpose corpora such as Wikipedia and CommonCrawl, which comprise a diverse range of online text. Although these general-purpose models work well on a wide range of tasks, a distributional shift in vocabulary and context causes them to perform poorly in specialized domains.

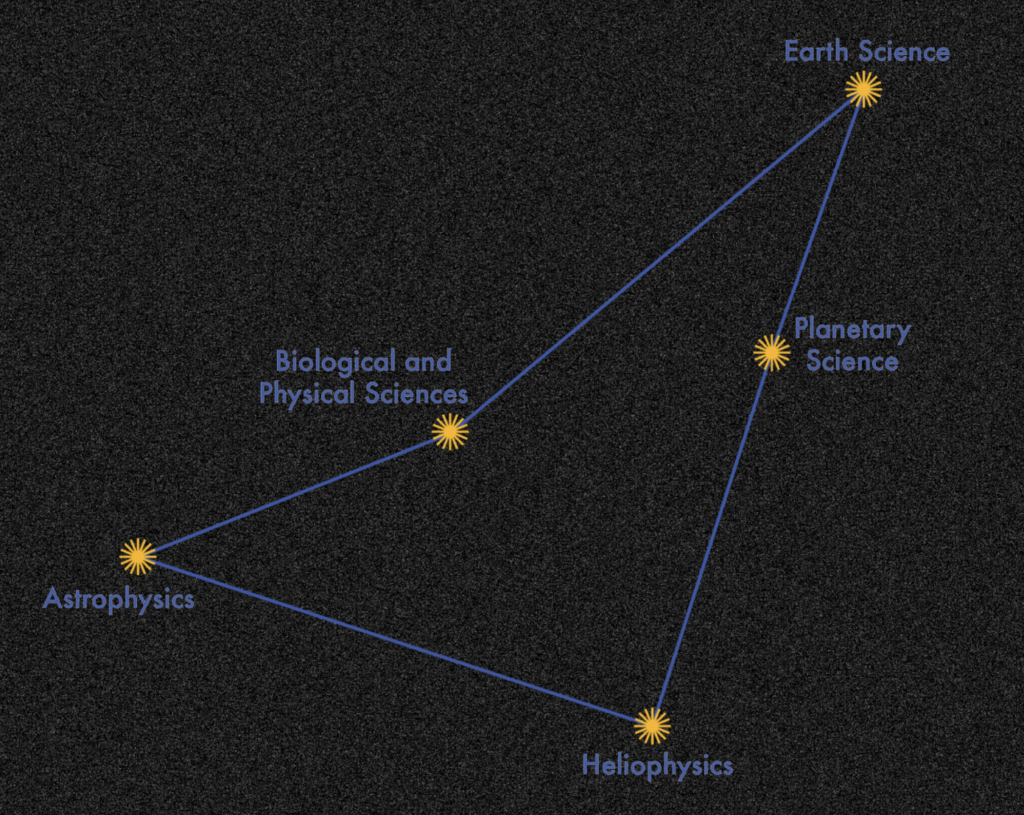

In a recent study, a team of researchers from NASA and IBM collaborated to develop a model that could be applied to Earth sciences, astronomy, physics, astrophysics, heliophysics, planetary sciences, and biology, among other multidisciplinary subjects. Existing models such as SCIBERT, BIOBERT, and SCHOLARBERT cover only some of these domains, and no existing model spans all of these related fields.

To bridge this gap, the team has developed INDUS, a set of encoder-based LLMs specialized in these particular fields. INDUS is trained on carefully selected corpora from various sources, with the aim of covering the body of knowledge in these fields. The INDUS suite includes several types of models to address different needs, which are as follows.

- Encoder Model: This model is trained on domain-specific vocabulary and corpora to excel in tasks related to natural language understanding.

- Contrastive-Learning-Based General Text Embedding Model: This model uses a wide range of datasets from multiple sources to improve performance in information retrieval tasks.

- Smaller Model Versions: These versions are created using knowledge distillation techniques, making them suitable for applications requiring lower latency or limited computational resources.
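To make the contrastive-learning idea behind the embedding model more concrete, here is a minimal sketch of an InfoNCE-style loss with in-batch negatives. It is an illustration only, not the INDUS training code: the function names, the temperature value, and the toy two-dimensional vectors are all assumptions made for this example.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def info_nce_loss(queries, passages, temperature=0.05):
    """Mean InfoNCE loss with in-batch negatives.

    queries[i] and passages[i] form a positive pair; every other
    passage in the batch acts as a negative for queries[i].
    The temperature is an assumed value, not taken from the paper.
    """
    losses = []
    for i, q in enumerate(queries):
        logits = [cosine(q, p) / temperature for p in passages]
        # Numerically stable log-sum-exp over all passages in the batch.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
        # Negative log-probability of picking the matching passage.
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)

# Toy 2-d "embeddings" (hypothetical values, not real model outputs).
queries = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]   # each query matches its own passage
swapped = [[0.1, 0.9], [0.9, 0.1]]   # each query matches the wrong passage

loss_aligned = info_nce_loss(queries, aligned)
loss_swapped = info_nce_loss(queries, swapped)
print(loss_aligned < loss_swapped)
```

The loss is small when each query sits closest to its paired passage and large when the pairs are mismatched, which is the signal that pushes an embedding model toward retrieval-friendly representations.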

The team has also produced three new scientific benchmark datasets to advance research in these interdisciplinary domains.

- CLIMATE-CHANGE NER: A climate change-related entity recognition dataset.

- NASA-QA: A dataset devoted to NASA-related topics used for extractive question answering.

- NASA-IR: A dataset focusing on NASA-related content used for information retrieval tasks.

The team has summarized their primary contributions as follows.

- The byte-pair encoding (BPE) technique has been used to create INDUSBPE, a specialized tokenizer. Because it was built from a carefully selected scientific corpus, this tokenizer can handle the specialized terms and language used in fields like Earth science, biology, physics, heliophysics, planetary sciences, and astrophysics. The INDUSBPE tokenizer improves the model’s comprehension and handling of domain-specific language.

- Using the INDUSBPE tokenizer and the carefully selected scientific corpora, the team has pretrained a number of encoder-only LLMs. Sentence-embedding models have been created by fine-tuning these pretrained models with a contrastive learning objective, which helps in learning universal sentence embeddings.

- More efficient, smaller versions of these models have also been trained using knowledge-distillation techniques, retaining strong performance while remaining suitable for resource-constrained scenarios.

- Three new scientific benchmark datasets have been released to help accelerate research in these interdisciplinary fields. These include NASA-QA, an extractive question-answering task based on NASA-related themes; CLIMATE-CHANGE NER, an entity recognition task focused on entities connected to climate change; and NASA-IR, a dataset intended for information retrieval tasks on NASA-related content. The purpose of these datasets is to offer rigorous benchmarks for assessing model performance in these particular fields.

- The experimental findings have shown that these models perform well on both the recently created benchmark tasks and the currently used domain-specific benchmarks. They performed better than domain-specific encoders like SCIBERT and general-purpose models like RoBERTa.
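As a rough illustration of how a byte-pair encoding tokenizer is built from a corpus, the sketch below learns merge rules from a tiny word-frequency table and then applies them to a word. This is the textbook BPE procedure rather than the actual INDUSBPE implementation; the toy corpus, the number of merges, and the function names are hypothetical.

```python
from collections import Counter

def apply_merge(symbols, pair):
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)

def learn_bpe_merges(word_counts, num_merges):
    """Learn up to `num_merges` merge rules from a {word: frequency} table."""
    # Start with every word split into single characters.
    vocab = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pair_counts = Counter()
        for symbols, count in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        new_vocab = {}
        for symbols, count in vocab.items():
            key = apply_merge(symbols, best)
            new_vocab[key] = new_vocab.get(key, 0) + count
        vocab = new_vocab
    return merges

def tokenize(word, merges):
    """Split a word into subword tokens by replaying the learned merges."""
    symbols = tuple(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return list(symbols)

# Hypothetical toy corpus: word -> frequency.
corpus = {"heliophysics": 5, "astrophysics": 6, "physics": 10, "planet": 3}
merges = learn_bpe_merges(corpus, num_merges=8)
print(tokenize("astrophysics", merges))
```

Because frequent character sequences are merged first, a tokenizer trained on scientific text tends to keep recurring domain terms in fewer pieces than one trained on general web text, which is the motivation for building a domain-specific vocabulary.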

In conclusion, INDUS is a significant advancement in the field of Artificial Intelligence, giving professionals and researchers in various scientific domains a strong tool that improves their capacity to carry out accurate and effective Natural Language Processing tasks.

Check out the Paper and Blog. All credit for this research goes to the researchers of this project.

Tanya Malhotra is a final year undergrad from the University of Petroleum & Energy Studies, Dehradun, pursuing BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with good analytical and critical thinking, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.