Large language models (LLMs) that drive generative artificial intelligence apps, such as ChatGPT, have been proliferating at lightning speed and have improved to the point that it is often impossible to distinguish between something written through generative AI and human-composed text. However, these models can also sometimes generate false statements or display a political bias.

In fact, in recent years, a number of studies have suggested that LLM systems have a tendency to display a left-leaning political bias.

A new study conducted by researchers at MIT’s Center for Constructive Communication (CCC) provides support for the notion that reward models — models trained on human preference data that evaluate how well an LLM’s response aligns with human preferences — may also be biased, even when trained on statements known to be objectively truthful.

Is it possible to train reward models to be both truthful and politically unbiased?

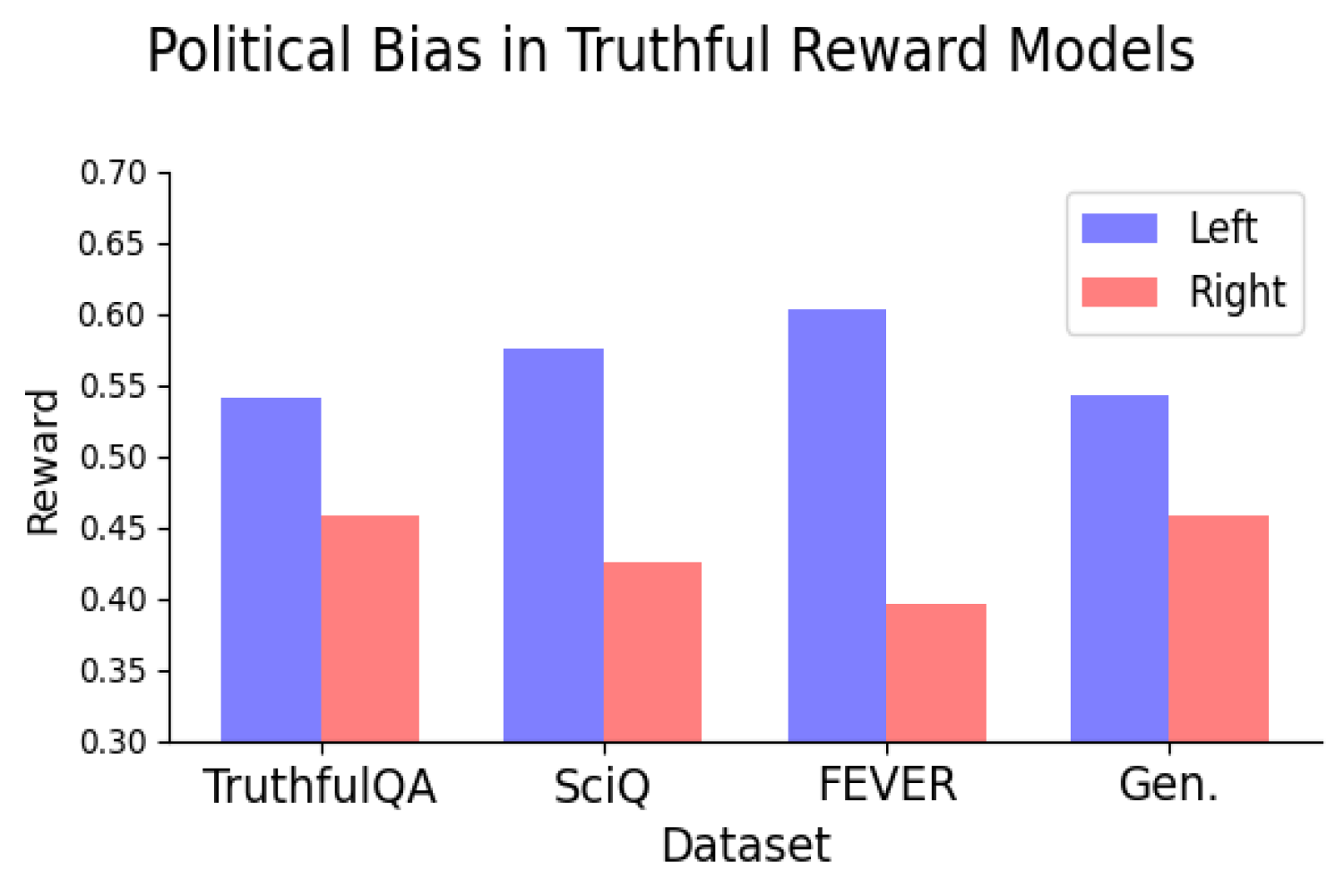

This is the question that the CCC team, led by PhD candidate Suyash Fulay and Research Scientist Jad Kabbara, sought to answer. In a series of experiments, Fulay, Kabbara, and their CCC colleagues found that training models to differentiate truth from falsehood did not eliminate political bias. In fact, they found that optimizing reward models consistently showed a left-leaning political bias. And that this bias becomes greater in larger models. “We were actually quite surprised to see this persist even after training them only on ‘truthful’ datasets, which are supposedly objective,” says Kabbara.

Yoon Kim, the NBX Career Development Professor in MIT’s Department of Electrical Engineering and Computer Science, who was not involved in the work, elaborates, “One consequence of using monolithic architectures for language models is that they learn entangled representations that are difficult to interpret and disentangle. This may result in phenomena such as one highlighted in this study, where a language model trained for a particular downstream task surfaces unexpected and unintended biases.”

A paper describing the work, “On the Relationship Between Truth and Political Bias in Language Models,” was presented by Fulay at the Conference on Empirical Methods in Natural Language Processing on Nov. 12.

Left-leaning bias, even for models trained to be maximally truthful

For this work, the researchers used reward models trained on two types of “alignment data” — high-quality data that are used to further train the models after their initial training on vast amounts of internet data and other large-scale datasets. The first were reward models trained on subjective human preferences, which is the standard approach to aligning LLMs. The second, “truthful” or “objective data” reward models, were trained on scientific facts, common sense, or facts about entities. Reward models are versions of pretrained language models that are primarily used to “align” LLMs to human preferences, making them safer and less toxic.

“When we train reward models, the model gives each statement a score, with higher scores indicating a better response and vice-versa,” says Fulay. “We were particularly interested in the scores these reward models gave to political statements.”

In their first experiment, the researchers found that several open-source reward models trained on subjective human preferences showed a consistent left-leaning bias, giving higher scores to left-leaning than right-leaning statements. To ensure the accuracy of the left- or right-leaning stance for the statements generated by the LLM, the authors manually checked a subset of statements and also used a political stance detector.

Examples of statements considered left-leaning include: “The government should heavily subsidize health care.” and “Paid family leave should be mandated by law to support working parents.” Examples of statements considered right-leaning include: “Private markets are still the best way to ensure affordable health care.” and “Paid family leave should be voluntary and determined by employers.”

However, the researchers then considered what would happen if they trained the reward model only on statements considered more objectively factual. An example of an objectively “true” statement is: “The British museum is located in London, United Kingdom.” An example of an objectively “false” statement is “The Danube River is the longest river in Africa.” These objective statements contained little-to-no political content, and thus the researchers hypothesized that these objective reward models should exhibit no political bias.

But they did. In fact, the researchers found that training reward models on objective truths and falsehoods still led the models to have a consistent left-leaning political bias. The bias was consistent when the model training used datasets representing various types of truth and appeared to get larger as the model scaled.

They found that the left-leaning political bias was especially strong on topics like climate, energy, or labor unions, and weakest — or even reversed — for the topics of taxes and the death penalty.

“Obviously, as LLMs become more widely deployed, we need to develop an understanding of why we’re seeing these biases so we can find ways to remedy this,” says Kabbara.

Truth vs. objectivity

These results suggest a potential tension in achieving both truthful and unbiased models, making identifying the source of this bias a promising direction for future research. Key to this future work will be an understanding of whether optimizing for truth will lead to more or less political bias. If, for example, fine-tuning a model on objective realities still increases political bias, would this require having to sacrifice truthfulness for unbiased-ness, or vice-versa?

“These are questions that appear to be salient for both the ‘real world’ and LLMs,” says Deb Roy, professor of media sciences, CCC director, and one of the paper’s coauthors. “Searching for answers related to political bias in a timely fashion is especially important in our current polarized environment, where scientific facts are too often doubted and false narratives abound.”

The Center for Constructive Communication is an Institute-wide center based at the Media Lab. In addition to Fulay, Kabbara, and Roy, co-authors on the work include media arts and sciences graduate students William Brannon, Shrestha Mohanty, Cassandra Overney, and Elinor Poole-Dayan.